- Research article

- Open access

From concerns to benefits: a comprehensive study of ChatGPT usage in education

International Journal of Educational Technology in Higher Education volume 21, Article number: 35 (2024)

Abstract

Artificial Intelligence (AI) chatbots are increasingly becoming integral components of the digital learning ecosystem. As AI technologies continue to evolve, it is crucial to understand the factors influencing their adoption and use among students in higher education. Against this backdrop, this study explores the behavioral determinants associated with university students’ use of the AI chatbot ChatGPT. The investigation examines the role of ChatGPT’s self-learning capabilities and their influence on students’ knowledge acquisition and application, which in turn affect individual impact. It further shows that chatbot personalization is associated with novelty value and benefits, underscoring their importance in shaping students’ behavioral intentions. Notably, individual impact is positively associated with perceived benefits and behavioral intention. The study also identifies potential barriers to AI chatbot adoption, with privacy concerns, technophobia, and guilt feelings emerging as significant detractors from behavioral intention. Despite these impediments, innovativeness emerges as a positive influence, enhancing both behavioral intention and actual behavior. This comprehensive exploration of the multifaceted influences on student behavior in the context of AI chatbot use provides a robust foundation for future research. It also offers valuable insights for AI chatbot developers and educators, helping them craft more effective strategies for AI integration in educational settings.

Introduction

In the realm of advanced language models, OpenAI’s Generative Pre-trained Transformer (GPT) has emerged as a groundbreaking tool that has begun to make significant strides in numerous disciplines (Biswas, 2023a, b; Firat, 2023; Kalla, 2023). Education, in particular, has become fertile ground for this innovation (Kasneci et al., 2023). A standout instance is ChatGPT, the latest iteration of this transformative technology, which has been rapidly adopted by a large segment of university students across the globe (Rudolph et al., 2023). At its core, ChatGPT can produce coherent, contextually appropriate responses based on the preceding dialogue, providing an interactive medium akin to human conversation (King & ChatGPT, 2023). This interactive capacity has the potential to restructure the educational landscape significantly, altering how students absorb and engage with academic content (Fauzi et al., 2023). Despite growing interest in this technology and its applications, the determinants shaping students’ adoption and use of ChatGPT remain largely unexamined. A thorough, systematic understanding of these determinants, both facilitative and inhibitive, is essential for deploying such a tool effectively and securing its acceptance in educational settings, thereby contributing to its ultimate success and efficacy. The current study addresses this gap, aiming to provide a nuanced understanding of the factors that influence university students’ behavioral intentions and actual behavior toward using ChatGPT for their studies.

ChatGPT offers a wide range of features enabled by its underlying technologies: machine learning (Rospigliosi, 2023) and natural language processing (Maddigan & Susnjak, 2023). Its capabilities range from completing text and answering questions to generating original content. In education, these functionalities could become a powerful apparatus for revolutionizing traditional learning modalities. As with a human tutor, students can converse with ChatGPT by asking questions, eliciting explanations, or engaging in in-depth discussions about their study materials. Beyond its conversational abilities, ChatGPT’s capacity to learn from past interactions enables it to refine its responses over time, catering more accurately to the user’s distinct needs and preferences (McGee, 2023). This attribute of continuous learning and adaptation paves the way for a more personalized and efficient learning experience, offering tailored academic assistance (Aljanabi, 2023). This combination of characteristics arguably makes ChatGPT a potent educational tool, one worthy of systematic examination to maximize its potential benefits and mitigate its challenges.

ChatGPT’s unique features give rise to a range of enabling factors that can significantly influence its adoption among university students. One such factor is the self-learning attribute that allows the AI to progressively enhance its performance (Rijsdijk et al., 2007). This feature aligns with the ongoing learning journey of students, potentially fostering a symbiotic learning environment. Another pivotal factor is the scope for knowledge acquisition and application. As ChatGPT assists in knowledge acquisition, students can simultaneously apply their newly acquired knowledge, potentially bolstering their academic performance. Personalization of the AI is another significant enabling factor, tailoring interactions to each student’s distinctive needs and thereby promoting a more individual-centered learning experience. Lastly, the novelty value that ChatGPT brings to education can stimulate student engagement, making learning an exciting and enjoyable process. Unraveling the influence of these factors on students’ behavior toward ChatGPT could yield valuable insights, paving the way for the tool’s effective implementation in education.

Alongside the positive influencers, some potential detractors could hinder the adoption of ChatGPT among university students. Among these, privacy concerns stand out as paramount (Lund & Wang, 2023; McCallum, 2023). With data breaches becoming increasingly common, students might be apprehensive about sharing their academic queries and discussions with an AI tool. Technophobia is another potential inhibitor, as not all students might be comfortable interacting with an advanced AI model. Moreover, some students might experience guilt feelings while using ChatGPT, equating its assistance to a form of ‘cheating’ (Anders, 2023). Evaluating these potential inhibitors is crucial to forming a comprehensive understanding of the factors that shape students’ behavior towards ChatGPT. This balanced approach could help identify ways to alleviate these concerns and foster wider adoption of this promising educational tool.

Despite the growing adoption of AI tools like ChatGPT, there is a notable gap in the literature pertaining to how these tools influence behavior in an educational context. Moreover, existing studies often fail to account for both enabling and inhibiting factors. Therefore, this paper endeavors to fill this gap by offering a more balanced and comprehensive exploration. The primary objective of this study is to empirically examine the determinants—both enablers and inhibitors—that influence university students’ behavioral intentions and actual behavior towards using ChatGPT. By doing so, this study seeks to make a valuable contribution to the academic discourse surrounding the use of AI in education, while also offering practical implications for its more effective implementation in educational settings.

The rest of this paper is structured as follows: the next section reviews the relevant literature and formulates the research hypotheses. The subsequent section outlines the research methodology, followed by a presentation and discussion of the results. Finally, the paper concludes with the implications for theory and practice, and suggestions for future research.

Theoretical background and hypothesis development

This study is grounded in several theoretical frameworks that inform the understanding of AI-based language models, such as ChatGPT, and of how their use relates to individual impact, benefits, behavioral intention, and behavior.

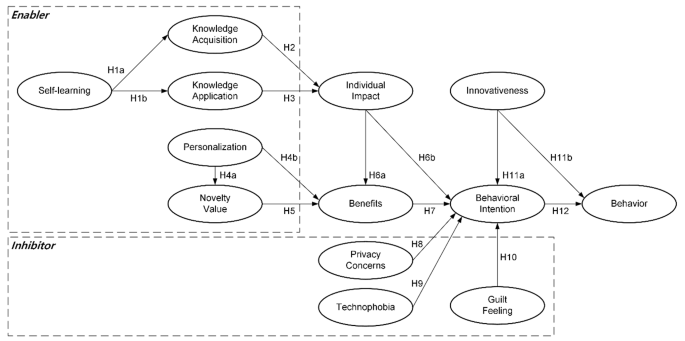

Figure 1 displays the research model. The model proposes that the AI’s self-learning capability influences knowledge acquisition and application, which in turn affect individual impact. Personalization of the AI and the novelty value it offers are also predicted to have substantial effects on perceived benefits, which influence the behavioral intention to use the AI and, ultimately, actual behavior. The model also accounts for potential negative influences of privacy concerns, technophobia, and feelings of guilt on the behavioral intention to use the AI. Finally, behavioral intention and innovativeness are anticipated to influence actual use of the AI.
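To make the structure of the research model explicit, the hypothesized paths can be written down as a small directed graph. The sketch below is illustrative only and is not the study’s statistical analysis: the construct identifiers (for example `self_learning`, `behavioral_intention`) are hypothetical names chosen here, the edge list is transcribed from the hypotheses developed in the following subsections, and each sign is the hypothesized direction of an effect, not an estimate. A depth-first search enumerates every chain from a construct to actual behavior and multiplies the signs along it, showing which indirect routes the model implies.

```python
from collections import defaultdict

# Hypothesized paths (source, target, expected sign), transcribed from the
# hypotheses in this section. Construct names are illustrative identifiers.
PATHS = [
    ("self_learning", "knowledge_acquisition", +1),
    ("self_learning", "knowledge_application", +1),
    ("knowledge_acquisition", "individual_impact", +1),
    ("knowledge_application", "individual_impact", +1),
    ("personalization", "novelty_value", +1),
    ("personalization", "benefits", +1),
    ("novelty_value", "benefits", +1),
    ("individual_impact", "benefits", +1),
    ("individual_impact", "behavioral_intention", +1),
    ("benefits", "behavioral_intention", +1),
    ("privacy_concern", "behavioral_intention", -1),
    ("technophobia", "behavioral_intention", -1),
    ("guilt_feeling", "behavioral_intention", -1),
    ("innovativeness", "behavioral_intention", +1),
    ("innovativeness", "behavior", +1),
    ("behavioral_intention", "behavior", +1),
]


def build_graph(paths):
    """Map each construct to its outgoing (target, sign) edges."""
    graph = defaultdict(list)
    for src, dst, sign in paths:
        graph[src].append((dst, sign))
    return graph


def chains_to(graph, start, target, prefix=None, sign=1):
    """Depth-first search yielding (path, implied_sign) for every directed
    path from start to target. The model is acyclic, so recursion ends."""
    prefix = (prefix or []) + [start]
    if start == target:
        yield prefix, sign
        return
    for nxt, edge_sign in graph.get(start, []):
        yield from chains_to(graph, nxt, target, prefix, sign * edge_sign)


if __name__ == "__main__":
    graph = build_graph(PATHS)
    for construct in ("self_learning", "personalization", "privacy_concern"):
        for path, implied in chains_to(graph, construct, "behavior"):
            print(" -> ".join(path), "(+)" if implied > 0 else "(-)")
```

The product of signs only indicates the direction an indirect effect would take if every hypothesized path held; the magnitude and significance of each path remain a matter for the empirical analysis.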

Self-determination theory (SDT)

SDT is a critical theoretical foundation for this study. The theory, as articulated by Ryan and Deci (2000), posits that when tasks are intrinsically motivating and individuals perceive competence in these tasks, their performance, persistence, and creativity improve. This framework supports the idea that the self-learning capability of AI tools such as ChatGPT is vital in fostering intrinsic motivation among users. Specifically, the self-learning aspect allows ChatGPT to tailor its interactions to the individual user’s needs, creating a more engaging and personally relevant learning environment. This can enhance users’ perception of their competence, resulting in more effective knowledge acquisition and application (H1a, H1b).

Further, by promoting effective knowledge acquisition and application, the self-learning capability of ChatGPT can have a significant positive individual impact (H2, H3). That is, students can use the knowledge gained from interactions with ChatGPT to improve their academic performance, deepen their understanding of various subjects, and even stimulate their creativity. This aligns with SDT’s emphasis on perceived competence as a driver of enhanced performance and creativity.

Therefore, in line with SDT, this study hypothesizes that the self-learning capability of ChatGPT, which promotes intrinsic motivation and perceived competence, can improve knowledge acquisition and application and, consequently, produce a positive individual impact. This theoretical foundation helps to justify the first three hypotheses of the study and underscores the significance of intrinsic motivation and perceived competence in leveraging the educational potential of AI tools like ChatGPT.

Self-learning

Self-learning is considered an inherent capability of ChatGPT (Rijsdijk et al., 2007). This capability allows ChatGPT to learn continuously, improve itself, and solve problems based on big data analysis. In AI, self-learning refers to a system’s ability to learn and improve from experience without being explicitly programmed (Bishop & Nasrabadi, 2006). An AI-based tool such as ChatGPT can thus evolve its capabilities over time, making it an efficient tool for knowledge acquisition and application among students (Berland et al., 2014). Berland et al. (2014) pointed out that self-learning in AI can provide personalized learning experiences, thereby helping students acquire and retain knowledge more effectively. This is attributed to the AI’s ability to adapt its responses based on past interactions and data, allowing for more targeted and relevant knowledge acquisition. Self-learning AI tools such as ChatGPT can also play a significant role in knowledge application. D’Mello and Graesser (2013) argued that such AI systems could enhance learners’ ability to apply acquired knowledge, as they can simulate a variety of scenarios and provide immediate feedback. This real-time, contextual problem-solving can further strengthen students’ knowledge application skills. Consequently, recognizing the potential of self-learning AI such as ChatGPT to improve both knowledge acquisition and application among university students, this study proposes the following hypotheses.

H1a. Self-learning has a positive effect on knowledge acquisition.

H1b. Self-learning has a positive effect on knowledge application.

Knowledge acquisition

Knowledge acquisition is the process by which new information is obtained and subsequently expanded upon as additional insights are gained. Research by Nonaka (1994) emphasized the role of knowledge acquisition in enhancing organizational productivity. Alavi and Leidner (2001) suggested that knowledge acquisition can positively influence individual performance by improving the efficiency and effectiveness of task execution. Several scholars have shown the significance of knowledge acquisition for perceived usefulness in learning contexts (Al-Emran et al., 2018; Al-Emran & Teo, 2020). Given the capabilities of AI chatbots like ChatGPT in providing users with immediate, accurate, and personalized information, it is likely that knowledge acquired through such a tool leads to improved task performance, that is, a positive individual impact. Therefore, this study proposes the following hypothesis:

H2. Knowledge acquisition has a positive effect on individual impact.

Knowledge application

Knowledge application, as defined by Al-Emran et al. (2020), refers to enabling individuals to use knowledge effortlessly through efficient storage and retrieval systems. AI-driven chatbots like ChatGPT can significantly enhance this process. Previous research has also corroborated the positive influence of effective knowledge application on individual outcomes (Al-Emran & Teo, 2020; Alavi & Leidner, 2001; Heisig, 2009). Therefore, this study proposes the following hypothesis:

H3. Knowledge application has a positive effect on individual impact.

Personalization theory

The study’s conceptual framework is further informed by the personalization literature (Maghsudi et al., 2021; Yu et al., 2017), which underscores the importance of personalized experiences in a learning environment. This literature holds that experiences tailored to individual needs and preferences enhance user engagement and satisfaction (Desaid, 2020; Jo, 2022). In the context of AI, personalization refers to the ability of the system, in this case ChatGPT, to adapt its interactions to the unique requirements and patterns of the individual user. This capacity to customize interactions could make the learning process more relevant and stimulating for students, potentially increasing the novelty value they derive from using ChatGPT (H4a).

Furthermore, personalization theory suggests that a personalized learning environment can increase perceived benefits, as it allows students to learn at their own pace, focus on areas of interest or difficulty, and receive feedback specific to their learning progress (H4b). This is consistent with the results of the present study, which found a significant positive relationship between personalization and perceived benefits.

Personalization

Personalization refers to a technology’s ability to tailor interactions to the user’s preferences, behaviors, and individual characteristics, providing a unique and individualized user experience (Wirtz et al., 2018). This capacity has been found to significantly enhance users’ perception of value in their interactions with the technology (Chen et al., 2022). Previous research has indicated that the personalization capabilities of AI chatbots such as ChatGPT can also enhance novelty value, as the individualized user experience provides a unique and fresh engagement every time (Haleem et al., 2022; Koubaa et al., 2023). Research has also highlighted that personalized interactions with AI chatbots can increase perceived benefits, such as improved efficiency, productivity, and task performance (Makice, 2009). Personalization enables the technology to cater effectively to the individual user’s needs, thereby enhancing the user’s perception of the benefits derived from using it (Wirtz et al., 2018). Thus, this study proposes the following hypotheses:

H4a. Personalization has a positive effect on novelty value.

H4b. Personalization has a positive effect on benefits.

Diffusion of Innovation

The diffusion of innovations theory offers a valuable lens through which to understand the adoption of new technologies (Rogers, 2010). The theory suggests that the diffusion and adoption of an innovation in a social system are influenced by five key factors: the innovation’s relative advantage, compatibility, complexity, trialability, and observability.

One attribute that resonates strongly with this study is relative advantage, the degree to which an innovation is perceived as being better than the idea it supersedes. This relative advantage often takes the form of novelty value, especially in the early stages of an innovation’s diffusion process. In this context, ChatGPT, with its self-learning capability, personalization, and interactive conversation style, can be seen as a novel alternative to traditional learning methods. This novelty can make the learning process more engaging, exciting, and effective, thereby increasing the perceived benefits for students.

Rogers’ theory also highlights that these perceived benefits can significantly influence an individual’s intention to adopt the innovation. The more an individual perceives the benefits of the innovation to outweigh the costs, the more likely they are to adopt it. In the context of this study, the novelty value of using ChatGPT contributes to the perceived benefits (H5), which in turn influences the intention to use it (H7). This underscores the importance of managing the perceived novelty value of AI tools like ChatGPT in a learning environment, as it can be a crucial determinant of their adoption and use. Furthermore, Rogers’ theory stresses the importance of compatibility and simplicity of the innovation. These attributes align with the concepts of personalization and self-learning capabilities of ChatGPT, highlighting that an AI tool that aligns with users’ needs and is easy to use is more likely to be adopted.

Novelty value

Novelty value refers to the unique and new experience that users perceive when interacting with the technology (Moussawi et al., 2021). This sense of novelty has been associated with increased interest, engagement, and satisfaction, contributing to a more valuable and enriching user experience (Hund et al., 2021). In this context, the novelty value provided by AI artifacts can enhance the perceived value of using the technology (Jo, 2022). This relationship has been documented in prior research, showing that the novelty of technology use can lead to increased perceived benefits, possibly due to heightened user interest, engagement, and satisfaction (Huang & Benyoucef, 2013). Therefore, this study suggests the following hypothesis:

H5. Novelty value has a positive effect on benefits.

Technology Acceptance Model (TAM)

TAM (Davis, 1989) has been widely employed to explain user acceptance and usage of technology. TAM posits that two particular perceptions, perceived usefulness (the degree to which a person believes that using a particular system would enhance their job performance) and perceived ease of use (the degree to which a person believes that using a particular system would be free of effort), determine an individual’s attitude toward using the system, which in turn influences their behavioral intention to use it and, ultimately, their actual system use.

In the context of this study, TAM offers valuable insight into how individual impact, perceived as the influence of using ChatGPT on a student’s academic performance and outcomes, can affect perceived benefits and behavioral intention (H6a, H6b). Students are more likely to use ChatGPT if they perceive it to have a positive impact on their learning outcomes. This perceived impact can enhance the perceived benefits of using ChatGPT, which can then influence their behavioral intention to use it. This aligns with Davis’s proposition that perceived usefulness positively influences behavioral intention to use a technology (Davis, 1989).

Moreover, the relationship between perceived benefits and behavioral intention (H7) is also consistent with TAM. According to Davis (1989), when users perceive a system as beneficial, they are more likely to form positive intentions to use it. Thus, the more students perceive the benefits of using ChatGPT, such as improved learning outcomes, personalized learning experiences, and increased engagement, the stronger their intention to use ChatGPT.

Individual impact

Individual impact refers to the perceived improvements in task performance and productivity brought about by the use of an IT such as ChatGPT (Aparicio et al., 2017). The perceived usefulness, which is closely related to individual impact, has a positive effect on behavioral intention to use a technology (Davis, 1989). In the literature, numerous studies have demonstrated that when users perceive a technology to be beneficial and impactful on their personal efficiency or productivity, they are more likely to have a positive behavioral intention towards using it (Gatzioufa & Saprikis, 2022; Kelly et al., 2022; Venkatesh et al., 2012). Similarly, these perceived improvements or benefits can also increase the perceived value of the technology, contributing to its overall appeal (Kim & Benbasat, 2006). Therefore, building upon this premise, this study proposes the following hypotheses.

H6a. Individual impact has a positive effect on benefits.

H6b. Individual impact has a positive effect on behavioral intention.

Benefits

Benefits refer to the perceived advantages or gains a user experiences from using an IT (Al-Fraihat et al., 2020). This study treats individual impact and benefits as separate constructs because their meanings and implications differ subtly in the context of AI chatbots like ChatGPT. While the two might appear closely related, treating them separately can provide a more nuanced understanding of user experiences and perceptions. Individual impact, as defined in this research, pertains to the direct effects of using ChatGPT on the user’s performance and productivity. It captures the tangible, immediate changes in a user’s work efficiency and task completion speed that result from using the AI chatbot. Individual impact thus serves as a measure of performance enhancement, indicating the ‘output’ side of the user’s interaction with ChatGPT. Benefits, in contrast, as gauged by the proposed survey items, are broader. They extend beyond immediate task-related effects to include the overall advantages perceived by users, ranging from acquiring new knowledge to achieving personal or educational goals. Benefits, in essence, capture the ‘outcome’ aspect of using ChatGPT, reflecting a broader range of positive effects that contribute to user satisfaction and perceived value. By differentiating between individual impact and benefits, this research can provide a more detailed and comprehensive analysis of users’ experiences with ChatGPT.

The role of benefits as a determinant of behavioral intention is firmly established in the extant literature on technology acceptance and adoption. The theory of reasoned action (TRA) posits that the perception of benefits or value is directly linked to behavioral intentions (Fishbein & Ajzen, 1975). Similarly, TAM proposes that perceived usefulness significantly influences behavioral intention to use a technology (Davis, 1989). Recent studies further confirm this relationship, showing that the perceived benefit of a technology strongly predicts users’ intentions to use it (Cao et al., 2021; Chao, 2019; Yang & Wibowo, 2022). Given the consistent findings supporting this relationship, the following hypothesis is proposed:

H7. Benefits have a positive effect on behavioral intention.

Privacy theory and Technostress

The privacy paradox theory, formulated by Barnes (2006), provides insight into the intriguing contradiction that exists between individuals’ expressed concerns about privacy and their actual online behavior. Despite voicing concerns about their privacy, many individuals continue to disclose personal information or engage in activities that may compromise their privacy. However, in the context of AI technologies like ChatGPT, this study posits that privacy concerns may have a more pronounced impact. Particularly in an educational context, where sensitive academic information may be shared, privacy concerns can act as a deterrent, negatively influencing the behavioral intention to use the technology (H8).

In addition, the technostress model of Tarafdar et al. (2007) illuminates technology-induced stress and its impact on behavioral intention. This model proposes that individuals can experience different forms of technostress; a closely related reaction is technophobia, the fear or anxiety some individuals experience when faced with new technologies. Technophobia can be particularly prevalent among individuals who lack familiarity or comfort with emerging technologies. In the context of this study, technophobia can potentially inhibit students from adopting and using AI tools like ChatGPT, thus negatively affecting their behavioral intention (H9).

Together, the privacy paradox theory and the technostress model provide a theoretical basis for understanding the potential inhibitors that could affect university students’ behavioral intention to use ChatGPT. By considering these theories, this study offers a more comprehensive perspective on the factors that can influence the adoption and use of AI technologies in an educational setting.

Privacy concerns

The concept of privacy concern refers to an individual’s anxiety or worry about the potential misuse or improper handling of personal data (Barth & de Jong, 2017). Privacy concern has been widely studied in the context of technology use, with a substantial body of research suggesting it is a major determinant of user behavior and intention (de Cosmo et al., 2021; Lutz & Tamò-Larrieux, 2021; Zhu et al., 2022). In the era of big data and pervasive digital technology, privacy concerns have increasingly become a pivotal factor shaping user behaviors (Smith et al., 2011). A host of empirical studies suggest that privacy concerns have a negative impact on behavioral intention. For example, Dinev and Hart (2006) observed that online privacy concerns negatively influence intention to use Internet services. Similarly, Li (2012) found that privacy concerns negatively affect users’ intention to adopt mobile apps. In the context of AI chatbots like ChatGPT, this concern could be even more pronounced, given the bot’s capacity for self-learning and data processing. Therefore, following the pattern observed in previous research, this study suggests the following hypothesis:

H8. Privacy concern has a negative effect on behavioral intention.

Technophobia

Technophobia is typically described as a personal fear or anxiety towards interacting with advanced technology, including AI systems such as ChatGPT (Ivanov & Webster, 2017). People suffering from technophobia often exhibit avoidance behavior when it comes to using new technology (Khasawneh, 2022). Several studies have empirically supported the negative relationship between technophobia and behavioral intention. For instance, Marakas et al. (1998) demonstrated that technophobia significantly reduces users’ behavioral intention to use a new IT system. Further, Selwyn (2018) found that technophobia can lead to avoidance behaviors and resistance towards adopting and using new technology, thus negatively influencing behavioral intention. Some users may fear losing control to these systems or fear making mistakes while using them, leading to a decline in their intention to use AI systems like ChatGPT. In consideration of these factors and in line with previous studies, this study posits the following hypothesis:

H9. Technophobia has a negative effect on behavioral intention.

Affect theory

The affect theory, proposed by Lazarus (1991), offers a comprehensive framework for understanding how emotions influence behavior, including technology use. According to this theory, emotional responses are elicited by the cognitive appraisal of a given situation and these emotional responses, in turn, have a significant impact on individuals’ behavior.

Applying this theory to the context of this study, it can be suggested that the use of AI technologies like ChatGPT can elicit a range of emotional responses among students. One particular emotion that is of interest in this study is guilt feeling. Guilt is typically experienced when individuals perceive that they have violated a moral standard. In the case of using AI technologies for learning, some students might perceive this as a form of ‘cheating’ or unfair advantage, thus leading to feelings of guilt.

This study hypothesizes that these guilt feelings can negatively influence students’ behavioral intention to use ChatGPT (H10). That is, students who experience guilt feelings related to using ChatGPT might be less inclined to use this tool for their learning. This underscores the importance of considering emotional factors, in addition to cognitive and behavioral factors, when exploring the determinants of technology use in an educational setting.

By integrating affect theory into the analysis, this study contributes to a more holistic understanding of the factors influencing the adoption and use of AI technologies in education. It also highlights the need for strategies that address potential guilt feelings among students to promote more positive attitudes and intentions towards using these technologies for learning.

Guilt feeling

Guilt feeling as a psychological state has been discussed extensively in consumer behavior research, often in the context of regret after certain consumption decisions (Khan et al., 2005; Zeelenberg & Pieters, 2007). Recently, guilt feeling has been associated with technology use, particularly when users perceive their technology use as excessive or inappropriate (Masood et al., 2022). For instance, guilt feelings could emerge among students who feel they rely excessively on AI tools such as ChatGPT to complete assignments instead of on their own effort and intellectual work. In line with the negative state relief model (Cialdini et al., 1973), individuals experiencing guilt tend to take actions to alleviate these feelings. If university students feel guilty about using ChatGPT for their assignments, they might reduce their future use of the tool, lowering their behavioral intention to use ChatGPT. This expectation is consistent with a study by Masood et al. (2022), which found that feelings of guilt resulting from excessive use of social networking sites predicted discontinuation. Thus, in light of previous research suggesting a link between guilt feelings and behavioral intentions related to technology use, this study proposes the following hypothesis:

H10. Guilt feeling has a negative effect on behavioral intention.

Innovativeness

Lastly, the diffusion of innovations theory (Rogers, 2010) lends further support to hypotheses H12a and H12b, which posit a positive influence of innovativeness on both behavioral intention and behavior. Innovativeness is defined as an individual’s predisposition to adopt new ideas, processes, or products earlier than other members of a social system (Rogers, 1983). It plays a critical role in technology adoption research, with prior studies highlighting its positive effects on both behavioral intention and actual behavior. For instance, Agarwal and Prasad (1998) found that personal innovativeness in the domain of information technology significantly influenced individuals’ intention to use a new technology. Similarly, Varma Citrin et al. (2000) discovered that consumer innovativeness positively affected both intention to use and actual use of self-service technologies. In the context of AI chatbots like ChatGPT, it is reasonable to propose that innovative university students, who are open to trying new technologies and applications, would exhibit stronger intentions to use ChatGPT and be more likely to use it in practice. Hence, this study advances the following hypotheses:

H11a. Innovativeness has a positive effect on behavioral intention.

H11b. Innovativeness has a positive effect on behavior.

Theory of planned behavior (TPB)

The TPB, as proposed by Ajzen (1991), is a widely recognized psychological framework for understanding human behavior in various contexts, including technology adoption (Huang, 2023; Meng & Choi, 2019; Song & Jo, 2023). At the heart of TPB is the principle that individuals’ behavioral intentions are the most immediate and critical determinant of their actual behavior. This principle is predicated on the assumption that individuals are rational actors who make systematic use of the information available to them when deciding their course of action.

In the context of this study, the TPB supports the hypothesis that behavioral intention, influenced by various enabling and inhibiting factors, can significantly predict the actual use of ChatGPT among university students (H12). That is, students who have a stronger intention to use ChatGPT, whether due to perceived benefits or other positive factors, are more likely to engage in actual usage behavior. Conversely, those with weaker intentions, perhaps due to privacy concerns or feelings of guilt, are less likely to use ChatGPT.

This hypothesis, grounded in TPB, is instrumental in linking the various enabling and inhibiting factors to the actual use of ChatGPT. It helps to consolidate the theoretical framework of the study, enabling a more holistic understanding of the behavioral dynamics at play. Moreover, it underscores the importance of cultivating positive behavioral intentions among students to encourage the actual use of AI technologies in education.

By integrating the TPB into the analysis, this study not only aligns with established psychological theories of behavior but also expands the application of these theories in the context of AI technology adoption in education.

Behavioral intention

The relationship between behavioral intention and actual behavior is a fundamental principle of various behavior prediction models, most notably the TPB (Ajzen, 1991). The TPB posits that behavioral intention is the most proximal determinant of actual behavior, on the assumption that individuals act in accordance with their intentions when they have sufficient control over the behavior. Empirical studies have provided substantial evidence for this link (Gatzioufa & Saprikis, 2022; Sun & Jeyaraj, 2013). In the context of ChatGPT usage among university students, higher intentions to use ChatGPT are therefore expected to translate into actual usage behavior. Drawing on existing literature and theoretical models, this study suggests the following hypothesis:

H12. Behavioral intention has a positive effect on behavior.

Methodology

Instrument

The instrument for this study was a structured questionnaire designed to assess the factors influencing university students’ behavioral intentions and actual use of ChatGPT. Questionnaire items were adopted and adapted from the existing literature, grounding them in established theories and previous studies relevant to the constructs of interest and the research objectives. Each item was rated on a seven-point Likert scale, with 1 representing ‘strongly disagree’ and 7 denoting ‘strongly agree’, which provided a nuanced measurement of participants’ perceptions and intentions.

Table A1 outlines a comprehensive list of constructs and their associated items used in the study, detailing how each construct is measured through specific statements. The constructs include self-learning, knowledge acquisition, knowledge application, personalization, novelty value, individual impact, benefits, privacy concern, technophobia, guilt feeling, innovativeness, behavioral intention, and behavior. Each item is attributed to sources from recent research, evidencing the rigorous grounding of these measures in existing literature. For instance, self-learning items are drawn from Chen et al. (2022), indicating the AI’s capability to enhance its functions through learning. Knowledge acquisition and application items reflect the user’s ability to gain and use knowledge via ChatGPT, sourced from Al-Emran et al. (2018) and Al-Sharafi et al. (2022), respectively. Personalization items, derived from Chen et al. (2022), measure ChatGPT’s ability to tailor responses to individual users. The novelty value construct, sourced from Hasan et al. (2021), captures the unique experience of using ChatGPT. Individual impact and benefits items, referenced from various authors, assess the practical advantages of using ChatGPT in educational settings. Privacy concern and technophobia items address potential user apprehensions, while guilt feeling items, a novel addition from Masood et al. (2022), explore emotional reactions to using AI in education. Innovativeness items, cited from Agarwal and Prasad (1998), examine user openness to new technologies. Behavioral intention and behavior constructs, finally, measure users’ future use intentions and current use patterns, demonstrating the study’s comprehensive approach to understanding AI chatbot utilization among university students.

The survey items underwent a rigorous validation process involving academic experts and a pilot study with a subset of the target population to refine their clarity and applicability. Prior to the actual distribution of the survey, a pretest was carried out with a select group of students to ensure the clarity and comprehensibility of the items. Feedback from this pretest was taken into account, and minor revisions were made to enhance the clarity of certain questions. The refined questionnaire was then disseminated to a broader group of university students, with the collected responses forming the primary data for this study. These responses were meticulously analyzed to understand the role of various factors on students’ behavioral intention and their actual usage of ChatGPT.

Questionnaire Design and Data Collection

The questionnaire was structured in two sections. The first section collected demographic information about the respondents, including their age, gender, and field of study, to capture the heterogeneity of the sample. The second section was dedicated to evaluating the constructs related to the study, each measured using multiple items.

Once the final version of the questionnaire was prepared, it was distributed to university students as a Google survey form through multiple channels to reach a broad range of respondents. First, it was sent to a network of professors at several universities. During the survey phase, we explained the purpose of our study to professors across the country and asked them to distribute the survey to their students if those students were actively using ChatGPT and were willing to participate. Some professors further shared the survey with their professional networks, expanding our reach. Approximately 400 students were initially approached through this network. This method was chosen to leverage existing academic networks, yielding a broad yet relevant participant base that reflects diverse experiences with ChatGPT across disciplines, academic levels, and universities. Second, the survey was shared within various university communities, such as student groups and clubs, reaching students who might not have been reached through the professors’ networks. Finally, the survey was distributed within ChatGPT community groups. This was especially important because it enabled the study to capture the perspectives of students already familiar with or using ChatGPT, enriching the study’s insights into the factors affecting students’ behavioral intentions towards AI tools like ChatGPT. Responses were collected over a period of several weeks, and a total of 273 responses were received.
The sample size of 273 is considered adequate given the number of latent and observed variables in the model. The literature suggests that larger samples are generally preferable in SEM-based studies because of their effect on statistical power (Hair et al., 2021). Here, the ratio of sample size to the number of latent variables (approximately 21:1, i.e., 273 respondents for 13 constructs) is within acceptable limits. The sample size also exceeds common rule-of-thumb minimums. For instance, Hair et al. (2021) suggested a minimum sample size of 10 times the number of items in the scale with the most items. Because the largest scale in this study contains 3 items, this rule implies a minimum of 30 respondents, which the sample of 273 comfortably exceeds. The data were then analyzed.
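
The sample-size arithmetic above can be reproduced in a few lines. This is a minimal sketch, not the authors’ script: the construct list is taken from the description of Table A1, the largest scale size (3 items) is the figure stated in the text, and the function names are hypothetical. The 10-times function follows the text’s item-based wording; the same arithmetic applies if the rule is instead read in terms of the largest number of structural paths pointing at one construct.

```python
# Sample-size checks as plain arithmetic (hypothetical helper names).
CONSTRUCTS = [
    "self-learning", "knowledge acquisition", "knowledge application",
    "personalization", "novelty value", "individual impact", "benefits",
    "privacy concern", "technophobia", "guilt feeling", "innovativeness",
    "behavioral intention", "behavior",
]


def sample_to_construct_ratio(n_respondents: int, n_latent: int) -> float:
    """Respondents per latent variable."""
    return n_respondents / n_latent


def ten_times_minimum(largest_count: int) -> int:
    """10-times heuristic: ten times the largest relevant count
    (items per scale, as worded in the text)."""
    return 10 * largest_count


N = 273
LARGEST_SCALE_ITEMS = 3  # as stated in the text

ratio = sample_to_construct_ratio(N, len(CONSTRUCTS))
minimum = ten_times_minimum(LARGEST_SCALE_ITEMS)
print(f"{ratio:.0f}:1 respondents per construct; minimum n = {minimum}; adequate: {N >= minimum}")
```

With 13 constructs the ratio comes out at exactly 21:1, and the heuristic minimum of 30 is well below the 273 responses collected.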

Table 1 presents sample details. The gender split among respondents was nearly even, with 123 males (45.1%) and 150 females (54.9%), ensuring diverse gender perspectives. Age-wise, respondents ranged from under 20 to over 23 years. The largest groups were those under 20 (42.9%) and over 23 (37.4%), with 21- and 22-year-olds constituting 9.2% and 10.6%, respectively. Most respondents (89.4%) were undergraduates, with postgraduates making up 10.6%, reflecting the primary academic users of AI tools like ChatGPT. In terms of study majors, Business (44.3%) was predominant, followed by Humanities and Social Sciences (22.7%), and Science (22.0%). Arts, Sports, and Military majors each represented 5.5%. The analysis of descriptive statistics was conducted using the pivot table feature in Excel.
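
The pivot-table step amounts to counting categories and dividing by the total. The sketch below is an illustrative alternative to Excel, assuming respondent-level records are available; here those records are rebuilt, hypothetically, from the reported gender counts (123 male, 150 female), and `percentage_table` is a hypothetical helper name.

```python
from collections import Counter


def percentage_table(values, decimals=1):
    """Count each category and convert it to a percentage of all responses,
    similar to a count/percent-of-total pivot table."""
    counts = Counter(values)
    total = sum(counts.values())
    return {k: (n, round(100 * n / total, decimals)) for k, n in counts.items()}


# Hypothetical respondent-level records rebuilt from the reported gender counts
genders = ["male"] * 123 + ["female"] * 150
print(percentage_table(genders))
# {'male': (123, 45.1), 'female': (150, 54.9)}
```

The same function can be applied to the age, degree-level, and major columns, and it makes it easy to check that each breakdown sums to the full sample.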

Results

The theoretical framework for this study was evaluated using the Partial Least Squares (PLS) approach in SmartPLS 4. PLS is a prevalent choice in the Information Systems/Information Technology domain (Hair et al., 2014). One of its principal benefits is that it imposes fewer constraints on sample size and on the distribution of residuals than covariance-based structural equation modeling tools such as LISREL and AMOS (Hair et al., 2021). Our analysis followed a three-step strategy covering common method bias (CMB), the measurement model, and the structural model.

Common Method Bias (CMB)

CMB refers to the spurious effect that can occur when both predictor and criterion variables are collected from the same source at the same time. It can potentially inflate or deflate the observed relationships between the constructs, leading to biased results. Thus, addressing the issue of CMB is critical to ensuring the validity of the study’s findings. In this study, we took several procedural and statistical steps to address the potential issue of CMB. Procedurally, we ensured the anonymity of the respondents and the confidentiality of their responses. We also used clear and simple language in the survey to minimize any ambiguity in the questions.

Statistically, we employed Harman’s single-factor test, a commonly used method for detecting CMB (Podsakoff et al., 2003). The premise of this test is that common method variance is a problem if a single factor emerges from a factor analysis of all questionnaire items, or if one general factor accounts for the majority of the covariance among the measures. In our case, the first factor explained 33.88% of the total variance, well below half, suggesting that CMB is not a substantial concern in this study. Additionally, we used the marker variable technique, which involves selecting a variable that is theoretically unrelated to the other variables in the study and checking its correlations with them; significant correlations may indicate common method variance. Our marker variable showed negligible correlations with the study variables, further indicating that CMB is unlikely to have substantially influenced our results. In conclusion, both procedural and statistical checks suggest that CMB is not a major issue in this study and that the relationships identified between the constructs can be interpreted with confidence.
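
The logic of Harman’s single-factor test can be illustrated with the unrotated first principal component of the item correlation matrix. This is a common approximation; the test is also often run as an unrotated exploratory factor analysis, which can give slightly different shares. The sketch below uses simulated, hypothetical data rather than the study’s responses and flags CMB when the first component exceeds 50% of the total variance, the usual reading of “majority”.

```python
import numpy as np


def first_factor_share(items: np.ndarray) -> float:
    """Share of total variance captured by the first unrotated component.

    items: array of shape (n_respondents, n_items).
    The eigenvalues of a correlation matrix sum to the number of items,
    so the largest eigenvalue divided by that sum is the variance share.
    """
    corr = np.corrcoef(items, rowvar=False)
    eigvals = np.linalg.eigvalsh(corr)  # returned in ascending order
    return float(eigvals[-1] / eigvals.sum())


rng = np.random.default_rng(42)
n = 273
# Hypothetical data: six items driven by two unrelated latent factors.
factors = rng.normal(size=(n, 2))
items = np.hstack([
    factors[:, [0]] + 0.5 * rng.normal(size=(n, 3)),
    factors[:, [1]] + 0.5 * rng.normal(size=(n, 3)),
])
share = first_factor_share(items)
print(f"first factor: {share:.1%}; flag CMB: {share > 0.5}")
```

When all items are driven by a single common source, the first component dominates and the share rises well above 50%. That is the pattern the test is designed to detect.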

Measurement model

The measurement model in this study was assessed by examining the reliability, convergent validity, and discriminant validity of the constructs. Reliability was assessed using composite reliability (CR) and Cronbach’s alpha (CA), both of which should exceed the threshold of 0.7 to indicate acceptable reliability. In our study, all constructs exceeded this threshold, demonstrating good reliability. Convergent validity is the extent to which a measure correlates positively with alternative measures of the same construct. It was assessed using the average variance extracted (AVE) and the factor loadings of the indicators: all AVE values should be greater than 0.5, and all factor loadings should be significant and exceed 0.7. In our study, all constructs met these criteria, demonstrating satisfactory convergent validity. Table 2 presents the results of the reliability and validity tests.
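
The reliability and convergent-validity statistics can be computed directly from standardized loadings and item scores. The sketch below uses the standard formulas for composite reliability (the ρ_c form, assuming uncorrelated measurement errors), AVE (the mean squared loading), and Cronbach’s alpha. The loadings are hypothetical, and SmartPLS also reports other variants, such as ρ_a, that are not reproduced here.

```python
import numpy as np


def composite_reliability(loadings) -> float:
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    using standardized outer loadings and assuming uncorrelated errors."""
    lam = np.asarray(loadings, dtype=float)
    squared_sum = lam.sum() ** 2
    error_var = (1.0 - lam ** 2).sum()
    return float(squared_sum / (squared_sum + error_var))


def average_variance_extracted(loadings) -> float:
    """AVE = mean of the squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float((lam ** 2).mean())


def cronbach_alpha(items) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of the sum score).

    items: (n_respondents, k_items) raw scores."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    item_var_sum = x.var(axis=0, ddof=1).sum()
    total_var = x.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1.0 - item_var_sum / total_var))


def meets_thresholds(loadings, alpha) -> bool:
    """CR and CA > 0.7, AVE > 0.5, every loading > 0.7 (the cut-offs in the text)."""
    lam = np.asarray(loadings, dtype=float)
    return bool(
        composite_reliability(lam) > 0.7
        and alpha > 0.7
        and average_variance_extracted(lam) > 0.5
        and (lam > 0.7).all()
    )


# Hypothetical standardized loadings for a three-item construct
loadings = [0.80, 0.85, 0.90]
print(round(composite_reliability(loadings), 3), round(average_variance_extracted(loadings), 3))
# 0.887 0.724
```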

Discriminant validity refers to the extent to which a construct is truly distinct from other constructs. This was examined using the Fornell-Larcker criterion and the heterotrait-monotrait ratio (HTMT). According to the Fornell-Larcker criterion, the square root of the AVE of each construct should be greater than its highest correlation with any other construct. Table 3 shows the results of Fornell-Larcker criterion.
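
The Fornell-Larcker rule is a simple comparison that a short sketch makes concrete. The AVEs and construct correlations below are hypothetical; in practice they would come from Tables 2 and 3. The function name is also illustrative.

```python
import numpy as np


def fornell_larcker(ave, corr):
    """For each construct, check whether sqrt(AVE) exceeds its largest
    absolute correlation with any other construct."""
    sqrt_ave = np.sqrt(np.asarray(ave, dtype=float))
    off_diag = np.abs(np.asarray(corr, dtype=float))
    np.fill_diagonal(off_diag, 0.0)  # ignore each construct's self-correlation
    return [bool(s > row.max()) for s, row in zip(sqrt_ave, off_diag)]


# Hypothetical AVEs and latent-variable correlations for two constructs
ave = [0.72, 0.65]
corr = [[1.00, 0.60],
        [0.60, 1.00]]
print(fornell_larcker(ave, corr))  # [True, True]
```

Here √0.72 ≈ 0.85 and √0.65 ≈ 0.81 both exceed 0.60. If the correlation were 0.85, neither construct would pass.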

Table 4 displays the HTMT matrix. Notably, knowledge acquisition and knowledge application showed an HTMT value of 0.954, above the 0.85 threshold, indicating considerable overlap between the two constructs. Although related, the constructs are conceptually distinct: knowledge acquisition involves gaining new knowledge, whereas knowledge application focuses on integrating and using that knowledge immediately. Given their different roles in AI-assisted learning, and supported by literature that tolerates higher HTMT values for closely related constructs within the same network, both constructs are retained in our study. Future research should further investigate their differentiation.
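
HTMT compares the average correlation between the items of two different constructs with the average correlation among items of the same construct. The sketch below implements that ratio from an item-level correlation matrix, taking absolute correlations as many implementations do. Because it uses simulated, hypothetical data with a known latent correlation, it shows how a value such as 0.954 arises when two constructs are nearly collinear.

```python
import numpy as np


def htmt(items, block_i, block_j):
    """Heterotrait-monotrait ratio for two constructs.

    items: (n_respondents, n_items) array; block_i / block_j: column indices
    of each construct's indicators (each block needs at least two items).
    """
    r = np.abs(np.corrcoef(items, rowvar=False))
    heterotrait = r[np.ix_(block_i, block_j)].mean()

    def monotrait(block):
        sub = r[np.ix_(block, block)]
        k = len(block)
        return (sub.sum() - k) / (k * (k - 1))  # mean off-diagonal correlation

    return float(heterotrait / np.sqrt(monotrait(block_i) * monotrait(block_j)))


def simulate(latent_corr, n=1000, seed=1):
    """Hypothetical data: two 3-item constructs with correlated latent factors."""
    rng = np.random.default_rng(seed)
    f1 = rng.normal(size=n)
    f2 = latent_corr * f1 + np.sqrt(1 - latent_corr ** 2) * rng.normal(size=n)
    noise = 0.5 * rng.normal(size=(n, 6))
    return np.column_stack([f1, f1, f1, f2, f2, f2]) + noise


for rho in (0.3, 0.95):
    value = htmt(simulate(rho), [0, 1, 2], [3, 4, 5])
    print(f"latent r = {rho}: HTMT = {value:.2f}, above 0.85: {value > 0.85}")
```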

Structural model

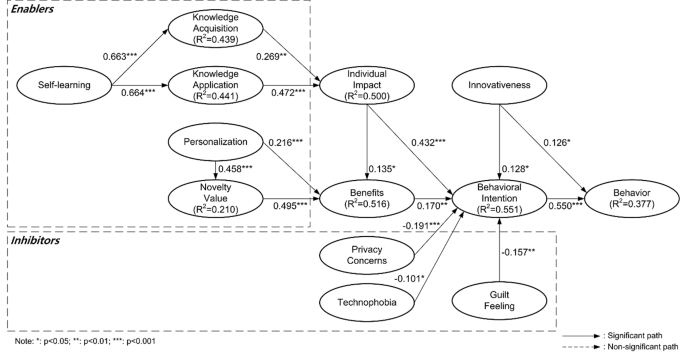

A Structural Equation Modeling (SEM) analysis using PLS was carried out to test the hypothesized relationships among the constructs. For hypothesis testing and for assessing the significance of the path coefficients, a bootstrapping procedure with 5,000 subsamples was used. Overall, the structural model explains approximately 38.0% of the variance in behavior. Figure 2 and Table 5 present the results of the SEM significance tests.
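
The bootstrapping idea can be illustrated on a single path. The sketch resamples respondents with replacement 5,000 times, re-estimates the path each time, and derives a t-value (estimate divided by the bootstrap standard error) and a percentile confidence interval. This is a simplification under stated assumptions: SmartPLS re-runs the full PLS algorithm on each subsample, whereas this sketch uses one standardized path in which the coefficient equals Pearson’s r and R² equals its square. The scores are simulated and hypothetical.

```python
import numpy as np


def standardized_path(x, y) -> float:
    """With a single predictor, the standardized path coefficient equals Pearson's r."""
    return float(np.corrcoef(x, y)[0, 1])


def bootstrap_path(x, y, n_boot=5000, seed=0):
    """Resample respondents with replacement and re-estimate the path each time."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    estimate = standardized_path(x, y)
    boots = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, len(x), size=len(x))
        boots[b] = standardized_path(x[idx], y[idx])
    se = boots.std(ddof=1)
    ci = np.percentile(boots, [2.5, 97.5])
    return estimate, estimate / se, (float(ci[0]), float(ci[1]))


# Hypothetical scores: behavioral intention (x) and behavior (y) for 273 students
rng = np.random.default_rng(7)
intention = rng.normal(size=273)
behavior = 0.6 * intention + rng.normal(size=273)
beta, t_stat, ci = bootstrap_path(intention, behavior)
print(f"beta = {beta:.3f}, t = {t_stat:.2f}, 95% CI = ({ci[0]:.3f}, {ci[1]:.3f}), R^2 = {beta ** 2:.3f}")
```

A path is usually treated as significant at the 5% level when |t| > 1.96 or, equivalently here, when the percentile interval excludes zero.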

Discussion

The results of this study confirmed positive associations between ChatGPT’s self-learning and both knowledge acquisition and knowledge application (H1a, H1b), consistent with prior research on AI-driven learning tools (Jarrahi et al., 2023). These results suggest that as students engage with ChatGPT, they acquire new knowledge, which is then processed and incorporated into their existing knowledge base. Interacting with ChatGPT not only helps students gain new knowledge but also helps them apply it in various scenarios, which in turn results in a higher individual impact. The ability of AI to leverage vast amounts of data and improve problem-solving provides fertile ground for enhanced learning. Yet, the novelty of this finding in the context of AI tools such as ChatGPT calls for more studies to solidify this understanding.

Additionally, the results revealed that knowledge acquisition and application significantly influenced individual impact (H2, H3), mirroring the findings of Arpaci et al. (2020), Al-Emran et al. (2018), Al-Emran and Teo (2020), and Alavi and Leidner (2001). The findings suggest that as learners more actively gain knowledge from ChatGPT, they experience improvements in productivity, task completion speed, and overall performance. Further, as learners effectively use the knowledge acquired from ChatGPT, they can better achieve their goals and enhance their work capabilities. This reinforces the importance of AI tools for task completion and productivity. Nevertheless, the proportion of variance in individual impact explained by these two factors indicates that other elements may also be at play.

Personalization was found to be significantly associated with novelty value and benefits (H4a, H4b), supporting Kapoor and Vij’s (2018) assertion that personalized experiences drive perceived value. The results are also in keeping with previous work (Haleem et al., 2022; Koubaa et al., 2023). The analysis suggests that users perceive the tailored experience delivered by ChatGPT as novel: as users find these tailored experiences new and intriguing, the novelty value of the AI tool is enhanced. Similarly, as the AI tool continuously learns and adapts to individual users’ needs, it helps learners achieve their learning objectives more effectively and efficiently. This aligns with the perception of increased benefits, as users recognize the tool’s contribution to their learning outcomes. However, the strength of these effects varies, suggesting that the impact of personalization may be contingent on other factors that need exploration.

The positive effect of novelty value on perceived benefits (H5) found in this study aligns with diffusion of innovation theory (Rogers, 2010) and a past study on the novelty of AI (Jo, 2022). The unique learning experience that ChatGPT offers might stimulate users’ curiosity and interest in learning. It could make the learning process more enjoyable, keeping users engaged and motivated, which in turn enhances their learning outcomes and the perceived benefits of using the chatbot. Yet, the relative strength of this relationship indicates that novelty may not be the only driver of perceived benefits.

Regarding the effects of individual impact on benefits and behavioral intention (H6a, H6b), our findings are in line with the TAM (Davis, 1989), further solidifying its relevance in the AI context. These findings imply that when users perceive a higher individual impact from using ChatGPT (i.e., improved efficiency, productivity, or task accomplishment), they also perceive more benefits and express stronger intentions to continue using it. Moreover, the immediate positive effects of using ChatGPT, such as accomplishing tasks more quickly or increasing productivity, directly contribute to users’ willingness to continue using it. Yet, the relative effect sizes suggest that while individual impact affects both outcomes, its influence is stronger on behavioral intention, a facet worthy of further examination.

In line with prior studies (de Cosmo et al., 2021; Lutz & Tamò-Larrieux, 2021; Zhu et al., 2022), privacy concern was negatively associated with behavioral intention (H8). This result is consistent with privacy calculus theory (Dienlin & Metzger, 2016; Dinev & Hart, 2006), which suggests that when privacy concerns outweigh perceived benefits, they can deter future usage intention. Consistent with previous findings (Marakas et al., 1998; Selwyn, 2013), this study found that technophobia negatively affects behavioral intention (H9), adding a novel dimension to our understanding of AI acceptance. This result highlights the need for user-friendly interfaces and, perhaps, educational programs to mitigate fear and promote user confidence when engaging with AI chatbots. Similarly, the negative effect of guilt feeling on behavioral intention (H10) provides a new angle for exploration in the context of AI usage.

Lastly, the results confirmed the strong effect of behavioral intention on actual behavior (H12), as posited by Ajzen (1991). Innovativeness also positively affected behavioral intention and behavior (H11a, H11b), adding to the growing literature on innovation adoption (Rogers, 2010). In the context of AI chatbots such as ChatGPT, students with higher levels of innovativeness demonstrated a stronger intention to use the chatbot and higher actual usage of it. This could mean that these students are more inclined to explore and use new technologies in their learning processes, which in turn positively influences their behavior. Furthermore, the positive association between innovativeness and behavioral intention may also be linked to the tendency of innovative individuals to perceive less risk in trying new technologies. This lower perceived risk, coupled with their natural proclivity towards novelty, may increase their intention to use AI chatbots. However, the precise mechanisms through which innovativeness operates in the AI context warrant further study.

Conclusion

Theoretical contributions

The current study, in its quest to uncover the relationships between AI chatbot variables and their effects on university students, has made several valuable theoretical contributions to the existing body of literature on AI and education. Prior to this research, much of the literature focused on the use of AI chatbots in commercial and customer service settings, leaving the educational context relatively unexplored (Ashfaq et al., 2020; Chung et al., 2020; Pillai & Sivathanu, 2020). This study has filled this gap by focusing specifically on university students and their interaction with AI chatbots, thereby providing new insights and extending the knowledge boundary of the AI field to encompass the educational sector.

Additionally, this study’s emphasis on the self-learning capabilities of ChatGPT as a significant determinant of knowledge acquisition and application among students is an important contribution to the literature. Previous research primarily centered on chatbots’ features and functionalities (Haleem et al., 2022; Hocutt et al., 2022), leaving the learning capabilities of these AI systems underexplored. By focusing on the self-learning feature of ChatGPT, this study expands the discourse on AI capabilities and their impact on knowledge dissemination in the educational context.

This research also contributes to the understanding of how innovativeness affects behavioral intention and behavior in AI chatbot usage. While prior studies have focused mainly on business contexts (Barış, 2020; Heo & Lee, 2018; Selamat & Windasari, 2021), applying these insights to AI chatbots is relatively new, making this study one of the first to do so. Treating innovativeness as a determinant in this realm is novel for several reasons. First, it shifts the focus from technology attributes to user characteristics, highlighting individual differences in technology adoption that are often overshadowed by technocentric views and departing from traditional technology acceptance models. Second, our study provides empirical evidence that innovativeness positively influences both behavioral intention and behavior, challenging previous assertions that limited its influence to early adoption stages (Agarwal & Prasad, 1998) and underscoring the dynamic role of this personal trait in driving technology usage. Third, by applying innovativeness to the unique interactional dynamics of AI chatbots, the research broadens the theoretical construct of innovativeness and emphasizes the relevance of individual traits in using emerging technologies. Finally, integrating innovativeness into the research model sets a precedent for future studies to consider other personal traits that may affect technology usage, promoting a more holistic, user-centric understanding of technology adoption. Overall, exploring innovativeness in AI chatbot usage offers valuable theoretical insights and opens avenues for future research in this rapidly evolving field.

This research makes a further theoretical contribution by exploring the negative effects of privacy concern, technophobia, and guilt feeling on behavioral intention towards AI chatbot use, areas often overlooked in favor of positive influences (Ashfaq et al., 2020; Huang, 2021; Nguyen et al., 2021; Rafiq et al., 2022). Privacy concern, consistent with prior studies (Dinev & Hart, 2006), is critically examined in the AI chatbot context, where data privacy is paramount because of the potential for extensive personal data sharing. This study extends understanding by focusing on AI chatbots, a context in which privacy concerns have heightened relevance. Technophobia, previously explored in technology adoption research (Khasawneh, 2022; Kotze et al., 2016; Koul & Eydgahi, 2020), is here applied specifically to AI chatbots, shedding light on how fear and anxiety towards advanced technology can affect usage intentions. This perspective enriches existing knowledge by situating technophobia within the realm of cutting-edge AI technologies. Guilt feeling, a relatively unexplored concept in technology use and especially in AI chatbot use, is also investigated. This study reveals how guilt, potentially stemming from reliance on AI for learning or work, can deter usage intention, thus addressing a significant gap in technology adoption research.

The research enhances theoretical understanding by substantiating the relationships between constructs such as knowledge acquisition and application, individual impact, and benefits in the AI chatbot context. Previously, educational and organizational literature recognized the importance of knowledge acquisition and application for performance (Al-Emran et al., 2018; Al-Emran & Teo, 2020; Bhatt, 2001; Grant, 1996; Heisig, 2009). This study extends these principles to AI chatbot usage, underscoring their critical role in effective technology utilization. The study’s innovation lies in demonstrating how these cognitive processes interact with the individual impact construct. This interaction offers a comprehensive view of a user’s learning journey within the AI chatbot environment. Furthermore, the research goes further by showing that user-perceived benefits are influenced by both AI chatbot personalization and individual impact, the latter being a synergistic result of knowledge acquisition and application. This interplay provides a nuanced view of the factors contributing to perceived benefits, deepening our theoretical grasp of what makes AI chatbot usage beneficial. By presenting a complex, interconnected model of AI chatbot usage, this research contributes to a more thorough understanding of the dynamics involved and encourages a multi-dimensional approach to examining the factors behind AI chatbot adoption and usage. The findings also prompt further scholarly inquiry into how these relationships vary with context, AI chatbot type, or user characteristics. Thus, this study lays a foundation for future research aimed at enriching the comprehension of AI chatbot usage dynamics.

Lastly, this study’s comprehensive research model, encompassing multiple constructs and their interrelationships, serves as a robust theoretical framework for future research in AI chatbot usage in educational settings. The findings of this study, highlighting the significance of various constructs and their relationships, provide a roadmap for scholars, guiding future research to better understand the dynamics of AI chatbot usage and its effects on students’ learning experiences. Furthermore, our research model takes a holistic approach by combining both positive and negative predictors of behavioral intention. This approach can offer a more comprehensive understanding of the intricate factors influencing AI chatbot use among university students.

Managerial implications

The findings of this study have numerous practical implications, particularly for educators, students, and developers of AI chatbot technologies.

Firstly, the results underscore the potential benefits of employing AI chatbots, like ChatGPT, as supplementary learning tools in educational settings. Given the significant positive effect of ChatGPT’s self-learning capabilities on knowledge acquisition and application among students, educators could consider integrating such AI chatbots into their teaching methods (Essel et al., 2022; Mohd Rahim et al., 2022; Vázquez-Cano et al., 2021). The integration of AI chatbots could take various forms, such as using chatbots to provide additional learning resources, facilitate interactive learning exercises, or offer personalized tutoring. Given the increasingly widespread use of online and blended learning methods in education (Crawford et al., 2020), the potential of AI chatbots to enhance students’ learning experiences should not be overlooked.

Secondly, this study’s findings may serve as a guide for students in maximizing their learning outcomes while using AI chatbots. Understanding that the self-learning capabilities of chatbots significantly enhance knowledge acquisition and application, students could be more inclined to utilize these tools for learning (Al-Sharafi et al., 2022). Furthermore, recognizing that personalization contributes positively to novelty value and benefits might motivate students to engage more deeply with personalized learning experiences offered by AI chatbots.

Thirdly, the present study’s results offer crucial insights into the features that users find valuable in an AI chatbot within an educational context. The demonstrated significance of self-learning capabilities, personalization, and novelty value indicates distinct areas that developers can focus on to improve user experience and educational outcomes. The importance of self-learning capabilities can shape the development strategies of AI chatbots. For instance, developers can focus on improving the ability of AI chatbots like ChatGPT to learn from user interactions, thereby enhancing the quality of responses over time. This would require advanced machine learning algorithms that grasp the context of user inquiries accurately, ensuring a consistent learning experience for users. As noted by Komiak and Benbasat (2006), the AI’s ability to learn and adapt based on user input and data over time can lead to improved user satisfaction and, ultimately, enhanced learning outcomes. Personalization is another pivotal feature that developers need to consider. This could involve customizing chatbot responses according to the user’s level of understanding, learning pace, and areas of interest. Personalization also extends to recognizing users’ specific learning goals and offering resources or guidance to help them achieve these goals. This feature can increase the value that users derive from their interactions with the chatbot (Jo, 2022). Lastly, the novelty value of AI chatbots in educational contexts should not be underestimated. Developers can capitalize on it by introducing innovative features and interaction modes that keep users engaged and make learning exciting, such as gamified learning activities, integration with other learning resources, and more interactive and responsive dialogue systems. It is well established in the literature that perceived novelty can positively affect users’ attitudes and behaviors towards a new technology (Hasan et al., 2021; Jo, 2022).

Fourthly, the study’s findings on the negative impact of privacy concerns, technophobia, and guilt feelings on behavioral intention provide crucial insights for AI chatbot developers and educational institutions. Privacy concerns form a significant barrier to the acceptance of AI technologies in various fields, including education (Belen Saglam et al., 2021; Ischen et al., 2020; Manikonda et al., 2018). These concerns typically arise from the vast amounts of personal data that AI systems gather, analyze, and store (Kokolakis, 2017). In response, developers can strengthen the privacy features of AI chatbots, clearly communicate their data handling practices to users, and ensure compliance with stringent data protection regulations. For instance, incorporating robust encryption and anonymization techniques and allowing users to control the data they share can allay privacy concerns. Educational institutions can also provide guidance on the safe and responsible use of AI technologies to further assuage these concerns. Technophobia, or the fear of technology, can also hinder the acceptance and use of AI chatbots. To address this, developers can design user-friendly interfaces, provide extensive user support, and introduce advanced features gradually to avoid overwhelming users. Moreover, educational institutions can play a vital role in mitigating technophobia by offering appropriate training and support to help students become comfortable with these technologies. It has been suggested that familiarity and understanding significantly reduce technophobia (Khasawneh, 2018a; Xi et al., 2022). Guilt feelings, as revealed in this study, are another factor that negatively influences behavioral intention. Such guilt can arise when users feel that they rely too heavily on AI chatbots for learning or assignments. To address this, educational institutions should foster an environment that encourages balanced use of AI tools. This could involve providing guidelines on ethical AI use, setting boundaries on AI tool usage in assignments, and integrating AI tools as supplemental rather than primary learning resources.

Finally, the significant effects of perceived benefits and individual impact on behavioral intention underscore the importance of demonstrating to users the tangible benefits of using AI chatbots in education. Making these benefits clear could encourage greater adoption and more effective use of AI chatbots in educational settings.

Limitations and Future Research

While this study makes several important contributions to our understanding of AI chatbots’ use in an educational context, it also opens up avenues for further investigation. The study’s primary limitation is its focus on university students, which may limit the generalizability of the findings. Future studies could explore different demographics, such as younger students, adult learners, or professionals engaging in continuous education, to provide a broader understanding of AI chatbot utilization in diverse learning contexts. The scope of the research could also be extended to include various types of AI chatbot technologies. As AI continues to advance, chatbots are becoming more diverse in their capabilities and functionalities. Hence, research considering different kinds of AI chatbots, their specific features, and their effects on learning outcomes could provide further insight. Moreover, this study primarily focuses on individual impacts and user perceptions. Future research could also look into institutional perspectives and the macro-level impacts of AI chatbot adoption in education. For instance, studies could investigate how the implementation of AI chatbots affects teaching strategies, curriculum development, and institutional resource allocation. Lastly, it would be insightful to conduct longitudinal studies to understand the long-term effects of AI chatbot usage on student performance and attitudes towards AI technologies. This approach could reveal trends and impacts that may not be immediately apparent in cross-sectional studies.

Data availability

The data used in this study are available from the corresponding author upon reasonable request.

References

Agarwal, R., & Prasad, J. (1998). A conceptual and operational definition of personal innovativeness in the domain of Information Technology. Information Systems Research, 9(2), 204–215.

Ajzen, I. (1991). The theory of Planned Behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211. https://doi.org/10.1016/0749-5978(91)90020-T.

Al-Emran, M., & Teo, T. (2020). Do Knowledge Acquisition and Knowledge sharing really affect E-Learning adoption? An empirical study. Education and Information Technologies, 25(3), 1983–1998.

Al-Emran, M., Mezhuyev, V., & Kamaludin, A. (2018). Students’ perceptions towards the integration of knowledge management processes in M-Learning systems: A preliminary study. International Journal of Engineering Education, 34(2), 371–380.

Al-Emran, M., Arpaci, I., & Salloum, S. A. (2020). An empirical examination of continuous intention to Use M-Learning: An Integrated Model. Education and Information Technologies, 25(4), 2899–2918. https://doi.org/10.1007/s10639-019-10094-2.

Al-Fraihat, D., Joy, M., & Sinclair, J. (2020). Evaluating E-Learning systems Success: An empirical study. Computers in Human Behavior, 102, 67–86.

Al-Sharafi, M. A., Al-Emran, M., Iranmanesh, M., Al-Qaysi, N., Iahad, N. A., & Arpaci, I. (2022). Understanding the impact of Knowledge Management factors on the sustainable use of AI-Based chatbots for Educational purposes using a Hybrid SEM-ANN Approach. Interactive Learning Environments, 1–20.

Alavi, M., & Leidner, D. E. (2001). Knowledge Management and Knowledge Management systems: Conceptual foundations and Research Issues. MIS Quarterly, 107–136.

Aljanabi, M. (2023). ChatGPT: Future directions and open possibilities. Mesopotamian Journal of Cybersecurity, 2023, 16–17.

Anders, B. A. (2023). Is using ChatGPT Cheating, Plagiarism, both, neither, or Forward thinking? Patterns, 4(3).

Aparicio, M., Bacao, F., & Oliveira, T. (2017). Grit in the path to E-Learning success. Computers in Human Behavior, 66, 388–399.

Ashfaq, M., Yun, J., Yu, S., & Loureiro, S. M. C. (2020). I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-Powered Service agents. Telematics and Informatics, 54, 101473.

Barış, A. (2020). A New Business Marketing Tool: Chatbot. GSI Journals Serie B: Advancements in Business and Economics, 3(1), 31–46.

Barnes, S. B. (2006). A Privacy Paradox: Social Networking in the United States. First Monday.

Barth, S., & de Jong, M. D. T. (2017). The privacy Paradox – investigating discrepancies between expressed privacy concerns and actual online behavior – a systematic literature review. Telematics and Informatics, 34(7), 1038–1058. https://doi.org/10.1016/j.tele.2017.04.013.

Belen Saglam, R., Nurse, J. R., & Hodges, D. (2021). Privacy Concerns in Chatbot Interactions: When to Trust and When to Worry. HCI International 2021-Posters: 23rd HCI International Conference, HCII 2021, Virtual Event, July 24–29, 2021, Proceedings, Part II.

Berland, M., Baker, R. S., & Blikstein, P. (2014). Educational Data Mining and Learning analytics: Applications to Constructionist Research. Technology Knowledge and Learning, 19, 205–220.

Bhatt, G. D. (2001). Knowledge Management in Organizations: Examining the Interaction between technologies, techniques, and people. Journal of Knowledge Management.

Bishop, C. M., & Nasrabadi, N. M. (2006). Pattern Recognition and Machine Learning (Vol. 4). Springer.

Biswas, S. S. (2023a). Potential use of Chat GPT in global warming. Annals of Biomedical Engineering, 51(6), 1126–1127.

Biswas, S. S. (2023b). Role of Chat GPT in Public Health. Annals of Biomedical Engineering, 1–2.

Brill, T. M., Munoz, L., & Miller, R. J. (2019). Siri, Alexa, and other Digital assistants: A study of customer satisfaction with Artificial Intelligence Applications. Journal of Marketing Management, 35(15–16), 1401–1436.

Cao, G., Duan, Y., Edwards, J. S., & Dwivedi, Y. K. (2021). Understanding managers’ attitudes and behavioral intentions towards using Artificial Intelligence for Organizational decision-making. Technovation, 106, 102312.

Chao, C. M. (2019). Factors determining the behavioral intention to Use Mobile Learning: An application and extension of the UTAUT Model. Frontiers in Psychology, 10, 1652.

Chen, Q., Gong, Y., Lu, Y., & Tang, J. (2022). Classifying and measuring the Service Quality of AI Chatbot in Frontline Service. Journal of Business Research, 145, 552–568.

Chung, M., Ko, E., Joung, H., & Kim, S. J. (2020). Chatbot E-Service and customer satisfaction regarding luxury brands. Journal of Business Research, 117, 587–595.

Cialdini, R. B., Darby, B. L., & Vincent, J. E. (1973). Transgression and altruism: A case for Hedonism. Journal of Experimental Social Psychology, 9(6), 502–516.

Crawford, J., Butler-Henderson, K., Rudolph, J., Malkawi, B., Glowatz, M., Burton, R., Magni, P., & Lam, S. (2020). COVID-19: 20 countries’ higher education Intra-period Digital pedagogy responses. Journal of Applied Learning & Teaching, 3(1), 1–20.

D’Mello, S., & Graesser, A. (2013). AutoTutor and Affective AutoTutor: Learning by talking with cognitively and emotionally Intelligent computers that talk back. ACM Transactions on Interactive Intelligent Systems (TiiS), 2(4), 1–39.

Davis, F. D. (1989). Perceived usefulness, perceived ease of Use, and user Acceptance of Information Technology. MIS Quarterly, 13(3), 319–340.

de Cosmo, L. M., Piper, L., & Di Vittorio, A. (2021). The role of attitude toward chatbots and privacy concern on the relationship between attitude toward Mobile Advertising and behavioral intent to Use Chatbots. Italian Journal of Marketing, 2021(1), 83–102. https://doi.org/10.1007/s43039-021-00020-1.

Desaid, D. (2020). A study of personalization effect on Users Satisfaction with E Commerce Websites.

Dienlin, T., & Metzger, M. J. (2016). An extended privacy Calculus model for SNSs: Analyzing Self-Disclosure and Self-Withdrawal in a Representative U.S. Sample. Journal of Computer-Mediated Communication, 21(5), 368–383. https://doi.org/10.1111/jcc4.12163.

Dinev, T., & Hart, P. (2006). An extended privacy Calculus model for E-Commerce transactions. Information Systems Research, 17(1), 61–80.

Essel, H. B., Vlachopoulos, D., Tachie-Menson, A., Johnson, E. E., & Baah, P. K. (2022). The impact of a virtual teaching Assistant (Chatbot) on students’ learning in Ghanaian higher education. International Journal of Educational Technology in Higher Education, 19(1), 1–19.

Fauzi, F., Tuhuteru, L., Sampe, F., Ausat, A. M. A., & Hatta, H. R. (2023). Analysing the role of ChatGPT in improving Student Productivity in Higher Education. Journal on Education, 5(4), 14886–14891.

Firat, M. (2023). How Chat GPT Can Transform Autodidactic Experiences and Open Education. Department of Distance Education, Open Education Faculty, Anadolu Unive.

Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention, and Behavior: An introduction to theory and research. Addison-Wesley.

Gatzioufa, P., & Saprikis, V. (2022). A Literature Review on Users’ Behavioral Intention toward Chatbots’ Adoption. Applied Computing and Informatics, ahead-of-print. https://doi.org/10.1108/ACI-01-2022-0021.

Grant, R. M. (1996). Toward a knowledge-based theory of the firm. Strategic Management Journal, 17(S2), 109–122.

Hair, J. F., Sarstedt, M., Hopkins, L., & Kuppelwieser, V. G. (2014). Partial least squares structural equation modeling (PLS-SEM): An Emerging Tool in Business Research. European Business Review, 26(2), 106–121.

Hair, J. F., Hult, G. T. M., Ringle, C. M., & Sarstedt, M. (2021). A primer on partial least squares structural equation modeling (PLS-SEM). Sage.

Haleem, A., Javaid, M., & Singh, R. P. (2022). An era of ChatGPT as a significant futuristic support Tool: A study on features, abilities, and challenges. BenchCouncil Transactions on Benchmarks, Standards and Evaluations, 2(4), 100089. https://doi.org/10.1016/j.tbench.2023.100089.

Hasan, R., Shams, R., & Rahman, M. (2021). Consumer Trust and Perceived Risk for Voice-controlled Artificial Intelligence: The case of Siri. Journal of Business Research, 131, 591–597.

Heisig, P. (2009). Harmonisation of Knowledge management–comparing 160 KM frameworks around the Globe. Journal of Knowledge Management, 13(4), 4–31.

Heo, M., & Lee, K. J. (2018). Chatbot as a New Business Communication Tool: The case of Naver TalkTalk. Business Communication Research and Practice, 1(1), 41–45.