- Research article

- Open access


Artificial intelligence in higher education: the state of the field

International Journal of Educational Technology in Higher Education volume 20, Article number: 22 (2023)

Abstract

This systematic review provides an up-to-date examination of artificial intelligence (AI) in higher education (HE) from 2016 to 2022, with several new findings. Following PRISMA principles and protocol, 138 articles were identified for full examination. Using a priori and grounded coding, data from the 138 articles were extracted, analyzed, and coded. The findings show that the number of publications in 2021 and 2022 was nearly two to three times that of previous years. With this rapid rise in AIEd HE publications, new trends have emerged. Research was conducted on six of the seven continents. The lead in the number of publications has shifted from the US to China. Another new trend concerns researcher affiliation: whereas prior studies showed a lack of researchers from departments of education, education is now the most common affiliation. Undergraduates were the most studied population, at 72% of studies. As in other studies, language learning was the most common subject domain, including writing, reading, and vocabulary acquisition. Regarding the intended users of AIEd, 72% of the studies focused on students, 17% on instructors, and 11% on managers. Grounded coding was used to answer the overarching question of how AIEd was used in HE. Five usage codes emerged from the data: (1) Assessment/Evaluation, (2) Predicting, (3) AI Assistant, (4) Intelligent Tutoring System (ITS), and (5) Managing Student Learning. This systematic review also revealed gaps in the literature that future researchers can use as a springboard, including new tools such as ChatGPT.

Highlights

- A systematic review examining AIEd in higher education (HE) up to the end of 2022.
- A new finding: China has replaced the US as the country with the most published studies.
- A two- to threefold increase in studies published in 2021 and 2022 compared with prior years.
- AIEd was used for: Assessment/Evaluation, Predicting, AI Assistant, Intelligent Tutoring System, and Managing Student Learning.

Introduction

The use of artificial intelligence (AI) in higher education (HE) has risen quickly in the last 5 years (Chu et al., 2022), with a concomitant proliferation of new AI tools. Scholars (viz., Chen et al., 2020; Crompton et al., 2020, 2021) report on the affordances of AI for both instructors and students in HE. These benefits include using AI in HE to adapt instruction to the needs of different types of learners (Verdú et al., 2017), to provide prompt, customized feedback (Dever et al., 2020), to develop assessments (Baykasoğlu et al., 2018), and to predict academic success (Çağataylı & Çelebi, 2022). These studies help inform educators about how artificial intelligence in education (AIEd) can be used in higher education.

Nonetheless, scholars (viz., Hrastinski et al., 2019; Zawacki-Richter et al., 2019) have highlighted a gap in understanding the collective affordances provided through the use of AI in HE. Therefore, the purpose of this study is to examine extant research from 2016 to 2022 to provide an up-to-date systematic review of how AI is being used in the HE context.

Background

Artificial intelligence has become pervasive in the lives of twenty-first century citizens and is being proclaimed as a tool that can enhance and advance all sectors of our lives (Górriz et al., 2020). The application of AI has attracted great interest in HE, which is highly influenced by the development of information and communication technologies (Alajmi et al., 2020). AI is used across subject disciplines, including language education (Liang et al., 2021), engineering education (Shukla et al., 2019), mathematics education (Hwang & Tu, 2021), and medical education (Winkler-Schwartz et al., 2019).

Artificial intelligence

The term artificial intelligence is not new. It was coined in 1956 by McCarthy (Cristianini, 2016), who followed up on the work of Turing (e.g., Turing, 1937, 1950). Turing described the existence of intelligent reasoning and thinking that could go into intelligent machines. The definition of AI has grown and changed since 1956, as there have been significant advancements in AI capabilities. A current definition of AI is “computing systems that are able to engage in human-like processes such as learning, adapting, synthesizing, self-correction and the use of data for complex processing tasks” (Popenici et al., 2017, p. 2). Defining AI can be challenging because scholars from linguistics, psychology, education, and neuroscience connect AI to the nomenclature, perceptions, and knowledge of their own disciplines. This has created a need for categories of AI within specific disciplinary areas. This paper focuses on the category of AI in Education (AIEd) and how AI is specifically used in higher educational contexts.

As the field of AIEd is growing and changing rapidly, there is a need to increase the academic understanding of AIEd. Scholars (viz., Hrastinski et al., 2019; Zawacki-Richter et al., 2019) have drawn attention to the need to increase the understanding of the power of AIEd in educational contexts. The following section provides a summary of the previous research regarding AIEd.

Extant systematic reviews

This growing interest in AIEd has led scholars to investigate research on the use of artificial intelligence in education. Some have conducted systematic reviews focused on a specific subject domain. For example, Liang et al. (2021) conducted a systematic review and bibliographic analysis of the roles and research foci of AI in language education. Shukla et al. (2019) focused their longitudinal bibliometric analysis on 30 years of AI use in engineering. Hwang and Tu (2021) conducted a bibliometric mapping analysis of the roles and trends of AI in mathematics education, and Winkler-Schwartz et al. (2019) examined the use of AI in medical education, looking for best practices in using machine learning to assess surgical expertise. These studies provide a specific focus on the use of AIEd in HE but do not provide an understanding of AI across HE.

Taking a broader view of AIEd in HE, Ouyang et al. (2022) conducted a systematic review of AIEd in online higher education, investigating the literature on the use of AI from 2011 to 2020. Their findings show that performance prediction, resource recommendation, automatic assessment, and improvement of learning experiences are the four main functions of AI applications in online higher education. Salas-Pilco and Yang (2022) focused on AI applications in Latin American higher education. Their results revealed that the main AI applications in higher education in Latin America are (1) predictive modeling, (2) intelligent analytics, (3) assistive technology, (4) automatic content analysis, and (5) image analytics. These studies provide valuable information for the online and Latin American contexts but not an overarching examination of AIEd in HE.

Other studies have examined AIEd across HE. Hinojo-Lucena et al. (2019) conducted a bibliometric study on the impact of AIEd in HE, analyzing the scientific production of AIEd HE publications indexed in the Web of Science and Scopus databases from 2007 to 2017. This study revealed that most of the published documents were proceedings papers. The United States had the highest number of publications, and the most cited articles were about implementing virtual tutoring to improve learning. Chu et al. (2022) reviewed the 50 most cited articles on AI in HE from 1996 to 2020, revealing that predictions of students’ learning status were most frequently discussed. AI technology was most frequently applied in engineering courses, and AI technologies most often had a role in profiling and prediction. Finally, Zawacki-Richter et al. (2019) analyzed AIEd in HE from 2007 to 2018 and revealed four primary uses of AIEd: (1) profiling and prediction, (2) assessment and evaluation, (3) adaptive systems and personalization, and (4) intelligent tutoring systems. There do not appear to be any studies examining the last 2 years of AIEd in HE, and these authors describe the rapid pace of both AI development and the use of AIEd in HE and call for further research in this area.

Purpose of the study

This study responds to the call from scholars (viz., Chu et al., 2022; Hinojo-Lucena et al., 2019; Zawacki-Richter et al., 2019) for research investigating the benefits and challenges of AIEd within HE settings. As existing reviews of AIEd in HE cover studies only up to 2020, this study provides the most up-to-date analysis, examining research through to the end of 2022.

The overarching question for this study is: what are the trends in HE research regarding the use of AIEd? Three research questions address it. The first two provide contextual information, such as where the studies occurred and the disciplines in which AI was used. These contextual details are important for presenting the main findings of the third question: how AI is being used in HE.

1. In what geographical location was the AIEd research conducted, and how has the trend in the number of publications evolved across the years?
2. What departments were the first authors affiliated with, and what were the academic levels and subject domains in which AIEd research was being conducted?
3. Who are the intended users of the AI technologies and what are the applications of AI in higher education?

Method

A PRISMA systematic review methodology was used to answer the three questions guiding this study, and PRISMA principles (Page et al., 2021) were followed throughout. The PRISMA extension Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P; Moher et al., 2015) provided an a priori roadmap for conducting a rigorous systematic review. The Preferred Reporting Items for Systematic Reviews and Meta-Analysis (PRISMA principles; Page et al., 2021) guided how articles were searched for, identified, and selected for inclusion, and then how the secondary data gathered from those studies were read, extracted, and managed (Moher et al., 2015; PRISMA Statement, 2021). This systematic review approach supports an impartial synthesis of the data (Hemingway & Brereton, 2009). Within the systematic review methodology, extracted data were aggregated and presented as whole numbers and percentages. A qualitative deductive and inductive coding methodology was also used to analyze extant data and generate new theories on the use of AI in HE (Gough et al., 2017).

The research begins with a search for the articles to be included in the study. Based on the research questions, the study parameters are defined, including the search years and the quality and types of publications to be included. Next, databases and journals are selected, and a Boolean search is created and used to search them. Once a set of publications is located, each is examined against inclusion and exclusion criteria to determine which studies will be included in the final set. Data relevant to the research questions are then extracted from the final set of studies and coded. This method section describes each of these steps in full to ensure transparency.

Search strategy

Only peer-reviewed journal articles were selected for examination in this systematic review, which ensured a level of confidence in the quality of the studies selected (Gough et al., 2017). The search parameters narrowed the focus to studies published from 2016 to 2022. This timeframe was selected to ensure the research was up to date, which is especially important given the rapid change in technology and AIEd.

Search

The data retrieval protocol employed both an electronic search and a hand search. The electronic search covered the educational databases within EBSCOhost, followed by additional electronic searches of Wiley Online Library, JSTOR, ScienceDirect, and Web of Science. A full-text search was conducted within each of these databases. Aligned with the research topic and questions, the Boolean search included terms related to AI, higher education, and learning; it is listed in Table 1. In the initial test search, the terms “machine learning” OR “intelligent support” OR “intelligent virtual reality” OR “chatbot” OR “automated tutor” OR “intelligent agent” OR “expert system” OR “neural network” OR “natural language processing” were also used. These were removed because they were subcategories of terms found in Part 1 of the search. Furthermore, including these specific AI terms returned a large number of studies of computer science courses focused on learning about AI rather than on the use of AI in learning.

Part 2 of the search ensured that articles involved formal university education. The terms higher education and tertiary were both used to account for the different terminology used in different countries. The final Boolean search was “Artificial intelligence” OR AI OR “smart technologies” OR “intelligent technologies” AND “higher education” OR tertiary OR graduate OR undergraduate. Scholars (viz., Ouyang et al., 2022) who conducted a systematic review of AIEd in HE up to 2020 noted that their study missed relevant articles and that other relevant journals should be examined intentionally. Therefore, a hand search was also conducted of other journals relevant to AIEd that may not be included in the databases. This is important because the field of AIEd is still relatively new, and journals focused on it may not yet be indexed in databases. The hand search included The International Journal of Learning Analytics and Artificial Intelligence in Education, the International Journal of Artificial Intelligence in Education, and Computers & Education: Artificial Intelligence.

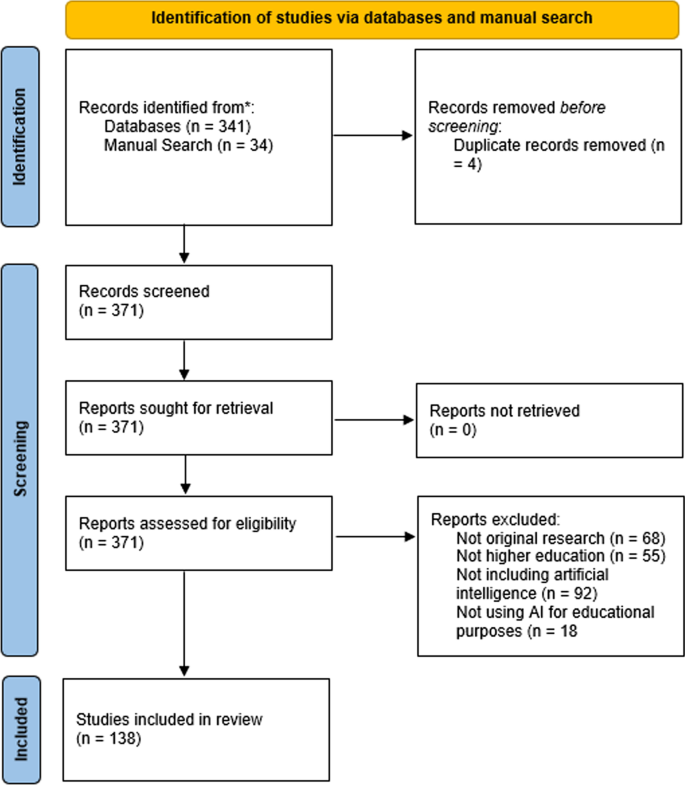
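Part 1 (AI terms) and Part 2 (higher-education terms) of the final search string are meant to be combined with AND. In a database that gives AND higher precedence than OR, the unparenthesized string could instead be read as A OR B OR C OR (D AND E) OR F OR G OR H, so the grouping matters. The sketch below is a hypothetical illustration, not the databases' actual query engines: the function names are invented here, and it assumes each term is matched as a case-insensitive whole-word phrase with no stemming.

```python
import re

# Terms from the final search string: Part 1 = AI terms, Part 2 = higher-education terms.
PART1 = ["artificial intelligence", "AI", "smart technologies", "intelligent technologies"]
PART2 = ["higher education", "tertiary", "graduate", "undergraduate"]


def has_term(text: str, term: str) -> bool:
    """Case-insensitive whole-word phrase match, so 'AI' does not match 'maintain'."""
    return re.search(r"\b" + re.escape(term) + r"\b", text, re.IGNORECASE) is not None


def matches_intended(text: str) -> bool:
    """Intended reading: (any Part 1 term) AND (any Part 2 term)."""
    return any(has_term(text, t) for t in PART1) and any(has_term(text, t) for t in PART2)


def matches_and_first(text: str) -> bool:
    """The same string if AND binds tighter than OR:
    A OR B OR C OR (D AND E) OR F OR G OR H."""
    a, b, c, d = (has_term(text, t) for t in PART1)
    e, f, g, h = (has_term(text, t) for t in PART2)
    return a or b or c or (d and e) or f or g or h


if __name__ == "__main__":
    for title in ["AI feedback in undergraduate writing", "AI for hospital scheduling"]:
        print(title, matches_intended(title), matches_and_first(title))
```

Both readings accept the first hypothetical title, but only the AND-first reading accepts the second, which has nothing to do with higher education. Writing the query as (Part 1) AND (Part 2) with explicit parentheses removes the dependence on each database's precedence rules.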

Screening

Electronic and hand searches yielded 371 articles for possible inclusion. Within the electronic database search, the search parameters restricted results to peer-reviewed journal articles published from 2016 to 2022 and removed duplicates. Further screening was conducted manually, as each of the 138 articles was reviewed in full by two researchers to check it against the inclusion and exclusion criteria in Table 2.

Inter-rater reliability was calculated as percentage agreement (Belur et al., 2018). The researchers reached 95% agreement on the coding, and discussion of the misaligned articles brought agreement to 100%. This screening process against the inclusion and exclusion criteria resulted in the exclusion of 237 articles, including the duplicates and those removed under the inclusion and exclusion criteria (see Fig. 1), leaving 138 articles for inclusion in this systematic review.

Fig. 1 PRISMA flow chart of article identification and screening (from Page et al., 2021)
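Percentage agreement (Belur et al., 2018), used for the 95% screening agreement reported above, is the share of items on which both researchers made the same decision. A minimal sketch, assuming each researcher's decisions are stored as parallel lists of labels; the function name and the 20-article example are hypothetical, not the study's data.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of items on which two coders assigned the same code.

    Unlike Cohen's kappa, this does not correct for agreement expected by chance.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same, non-empty set of items")
    agreed = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * agreed / len(coder_a)


if __name__ == "__main__":
    # Hypothetical screening decisions for 20 articles; the coders disagree on one.
    researcher_1 = ["include"] * 12 + ["exclude"] * 8
    researcher_2 = ["include"] * 11 + ["exclude"] * 9
    print(percent_agreement(researcher_1, researcher_2))
```

One disagreement in 20 decisions gives 95.0. Because percentage agreement does not discount agreement that would occur by chance, it is a simpler and more lenient measure than chance-corrected statistics such as Cohen's kappa.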

Coding

The 138 articles were then coded to answer each research question using deductive and inductive coding methods. Deductive coding examines data using a priori codes, which are predetermined categories; this process was used to code the countries, years, author affiliations, academic levels, and domains into their respective groups. Author affiliations were coded by the academic department of the first author of each study. First authors were chosen because that person is the primary researcher of the study, which follows past research practice (e.g., Zawacki-Richter et al., 2019). The intended user of the AI was also coded using the a priori codes Student, Instructor, Manager, and Others. The Manager code was used for those involved in organizational tasks, e.g., tracking enrollment; Others was used for those not fitting the other three categories.

Inductive coding was used for the overarching question of this study: how AI was being used in HE. Researchers of extant systematic reviews on AIEd in HE (viz., Chu et al., 2022; Zawacki-Richter et al., 2019) often matched the uses of AI to a pre-existing, a priori framework. A grounded coding methodology (Strauss & Corbin, 1995) was selected for this study to allow trends in AIEd in HE to emerge from the data. This is important because it gives a direct understanding of how AI is being used, rather than fitting the data to researchers' preconceived ideas of how it may be used.

The grounded coding process involved extracting from the articles how AI was being used in HE. “In vivo” coding (Saldana, 2015) was used alongside grounded coding; in vivo codes use language taken directly from the article to capture the primary authors’ language and ensure consistency with their findings. The grounded coding design used a constant comparative method. Researchers identified important text in the articles related to the use of AI, and through an iterative process, initial codes led to axial codes, with constant comparison of uses of AI with other uses of AI, then of uses of AI with codes, and of codes with codes. Codes were deemed theoretically saturated when the majority of the data fit one of the codes. For both the a priori and the grounded coding, two researchers coded the data and reached an inter-rater percentage agreement of 96%; after discussing misaligned articles, they reached 100% agreement.

Findings and discussion

The findings and discussion section is organized by the three questions guiding this study. The first two questions provide contextual information on the AIEd research, and the final question provides a rigorous investigation into how AI is being used in HE.

RQ1. In what geographical location was the AIEd research conducted, and how has the trend in the number of publications evolved across the years?

Countries

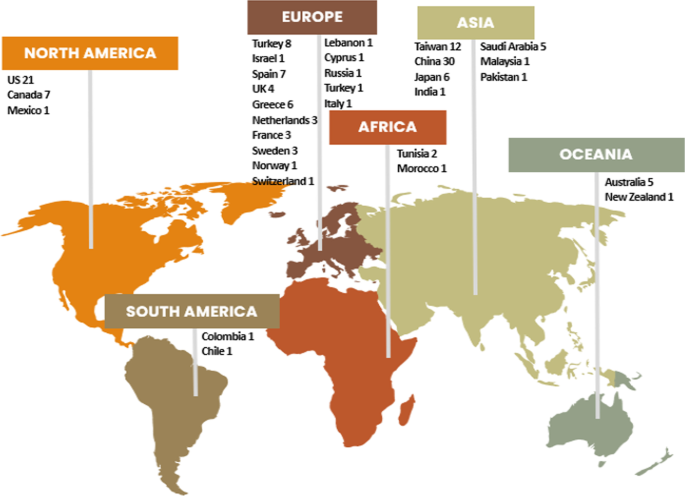

The 138 studies took place across 31 countries on six of the seven continents. Nonetheless, the distribution was not equal across continents. Asia had the largest share of AIEd studies in HE, at 41%; of the seven Asian countries represented, Taiwan and China accounted for 42 of the 58 studies. Europe, at 30%, had the second largest share, with 15 countries contributing from one to eight studies apiece. North America, at 21% of the studies, was third, with the USA producing 21 of the 29 studies on that continent; these 21 studies place the USA second behind China. Only 1% of studies were conducted in South America and 2% in Africa. See Fig. 2 for a visual representation of the distribution of studies across countries. The continents with high numbers of studies are those with high-income countries, whereas those with low numbers reflect a paucity of publications from low-income countries.

Data from Zawacki-Richter et al.’s (2019) 2007–2018 systematic review showed that the USA conducted the most studies globally, at 43 of 146, and China the second most, at 11 of the 146 papers. Researchers have noted a rapid trend of Chinese researchers publishing more papers on AI and securing more patents than their US counterparts in a field originally led by the US (viz., Li et al., 2021). The data from this study corroborate this trend, with China now leading in the number of AIEd publications.

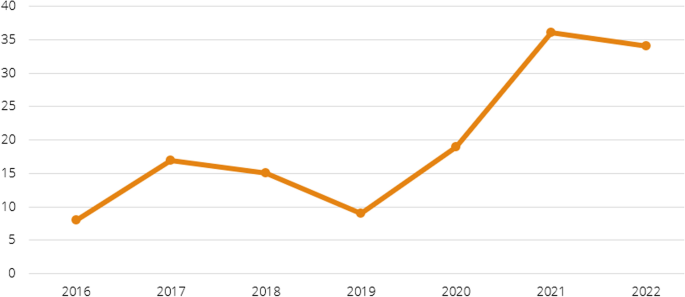

Years

With the accelerated use of AI in society, gathering data on the use of AIEd in HE gives the scholarly community specific information on that growth and on whether it is as prolific as scholars anticipated (e.g., Chu et al., 2022). The analysis of the 138 studies shows that the use of AIEd in HE has greatly increased. There is a drop in 2019, followed by a sharp rise in 2021 and 2022; see Fig. 3.

Data on the rise in AIEd in HE are similar to the findings of Chu et al. (2022), who noted an increase between 1996–2010 and 2011–2020. Nonetheless, Chu et al.’s parameters span decades, and such a rise is to be anticipated for a relatively new technology in a longitudinal review. Data from this study show a dramatic rise since 2020, with a 150% increase in 2021–2022 over the prior 2 years (2019–2020). The rise in 2021 and 2022 could have been caused by the vast increase in HE faculty having to teach with technology during the pandemic lockdown. Faculty worldwide used technologies, including AI, to explore how they could continue teaching and learning that had often been face-to-face before the lockdown. The disadvantage of this rapid adoption is that there was little time to explore the possibilities of AI to transform learning, and AI may have been used to replicate past teaching practices without considering new strategies, previously inconceivable, that its affordances make possible.

However, a further examination of the research from 2021 to 2022 suggests that new strategies are being considered. For example, Liu et al. (2022) used AIEd to provide information on students’ interactions in an online environment and to examine their cognitive effort, and Yao (2022) examined the use of AI to determine student emotions while learning.
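The 150% increase reported above is a relative change. Writing the number of publications in 2019–2020 as N_old and in 2021–2022 as N_new (symbols introduced here for illustration only), the percentage increase and what 150% implies are:

```latex
\text{percentage increase} = \frac{N_{\text{new}} - N_{\text{old}}}{N_{\text{old}}} \times 100\%,
\qquad
150\% \;\Rightarrow\; N_{\text{new}} = N_{\text{old}} + 1.5\,N_{\text{old}} = 2.5\,N_{\text{old}}.
```

A 150% increase therefore means the later 2-year period produced 2.5 times as many studies as the earlier one; for a hypothetical N_old of 20, N_new would be 50. The yearly counts themselves are shown in Fig. 3.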

RQ2. What departments were the first authors affiliated with, and what were the academic levels and subject domains in which AIEd research was being conducted?

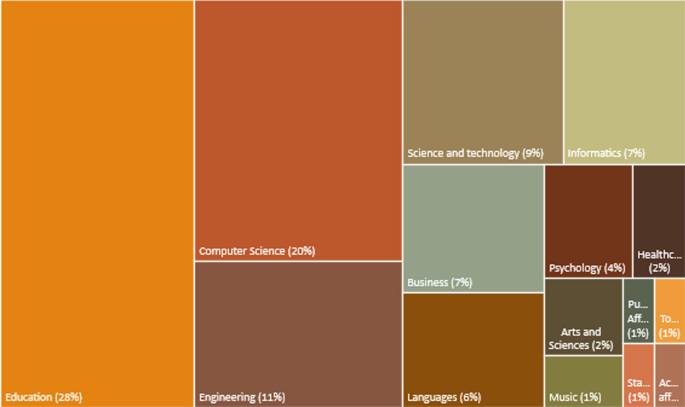

Department affiliations

Data from the AIEd HE studies show that first authors were most frequently from colleges of education (28%), followed by computer science (20%). Figure 4 presents the 15 academic affiliations of the authors found in the studies. This wide variety of affiliations demonstrates the many ways AI can be used across educational disciplines, and shows that faculty in diverse areas, including tourism, music, and public affairs, were interested in how AI can be used for educational purposes.

In an extant AIEd HE systematic review, Zawacki-Richter et al. (2019) titled their study Systematic review of research on artificial intelligence applications in higher education—where are the educators? In this study, the authors highlighted that only six percent of AIEd studies in HE were written by researchers directly connected to the field of education (i.e., from a college of education). The researchers found a great lack of attention to the pedagogical and ethical implications of implementing AI in HE and a need for more educational perspectives on AI developments from educators conducting this work. Our data suggest that educators are now showing greater interest in leading these research endeavors, with education the most common affiliation. This may again be due to the pandemic, with those in the field of education needing to support faculty in other disciplines and/or needing to explore technologies for their own teaching during the lockdown. It may also reflect education professors’ growing familiarity with AI tools, driven by increased societal attention to AI. As much of the research by education faculty focuses on teaching and learning, they are well positioned to share their research on the potential affordances of AIEd with faculty in other disciplines.

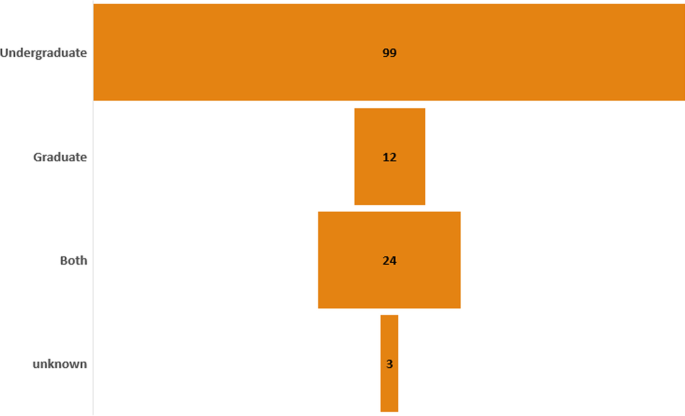

Academic levels

The a priori coding of academic levels shows that the majority of studies involved undergraduate students, with 99 of the 138 (72%) focused on this population, compared with 12 of 138 (9%) for graduate students. Some of the studies used AI for both academic levels; see Fig. 5.

This high percentage of studies focused on undergraduates is congruent with an earlier AIEd HE systematic review (viz., Zawacki-Richter et al., 2019) that also reported student academic levels. This focus may be due to the variety of affordances AIEd offers for undergraduates, such as predictive analytics on dropouts and academic performance. These uses of AI may be less needed for graduate students, who already have a record of performance from their undergraduate years. Another reason for this demographic focus may be convenience sampling, as researchers in HE typically have a much larger and more accessible undergraduate population than graduate population. This disparity between undergraduate and graduate populations is a concern, as AIEd has the potential to be valuable in both settings.
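The percentages in this subsection are whole-number shares of the 138 included studies. A minimal sketch of that calculation follows; the function name is hypothetical, and it simply reproduces the reported 72% and 9% from the counts 99 and 12.

```python
def share_percent(count: int, total: int) -> int:
    """Percentage of `total` represented by `count`, rounded to a whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total)


if __name__ == "__main__":
    TOTAL_STUDIES = 138  # final sample of this review
    print("undergraduate:", share_percent(99, TOTAL_STUDIES))
    print("graduate:", share_percent(12, TOTAL_STUDIES))
```

The two shares are not expected to sum to 100%, since some studies involved both academic levels (see Fig. 5). Python's `round()` rounds exact halves to the nearest even number, which does not affect these values (71.7 and 8.7).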

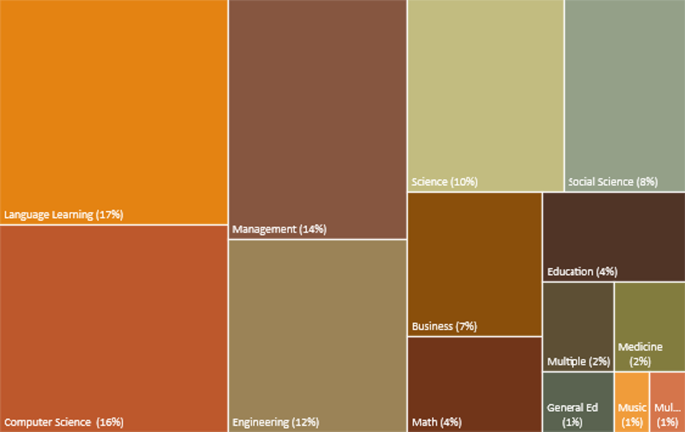

Subject domains

The studies were coded into 14 areas in HE: 13 subject domains and one category for AIEd used in the management of students; see Fig. 6. There is no wide difference in the percentages of the top subject domains, with language learning at 17%, computer science at 16%, and engineering at 12%. The management of students category appeared third on the list, at 14%. Prior studies have also found AIEd often used for language learning (viz., Crompton et al., 2021; Zawacki-Richter et al., 2019). These results differ, however, from Chu et al.’s (2022) findings, in which engineering dramatically led with 20 of the 50 studies and other subjects, such as language learning, appeared only once or twice. Chu et al.’s study appears to be an outlier: although the searches were conducted in similar databases, it included only 50 studies from 1996 to 2020.

Previous studies of AI in language learning, which focused primarily on writing, reading, and vocabulary acquisition, used the affordances of natural language processing and intelligent tutoring systems (e.g., Liang et al., 2021). This is similar to findings from studies using AI for automated feedback on writing in a foreign language (Ayse et al., 2022) and for AI translation support (Al-Tuwayrish, 2016). The large use of AI for managerial activities in this systematic review focused on making predictions (12 studies) and then on admissions (three studies). It is encouraging to see AI used to look across multiple databases for trends that may not have been anticipated or cross-referenced before (Crompton et al., 2022). For example, when examining dropouts, researchers may consider class attendance but not other factors that appear unrelated. AI analysis can examine all of these factors and may find that dropping out is due to factors beyond class attendance.

RQ3. Who are the intended users of the AI technologies and what are the applications of AI in higher education?

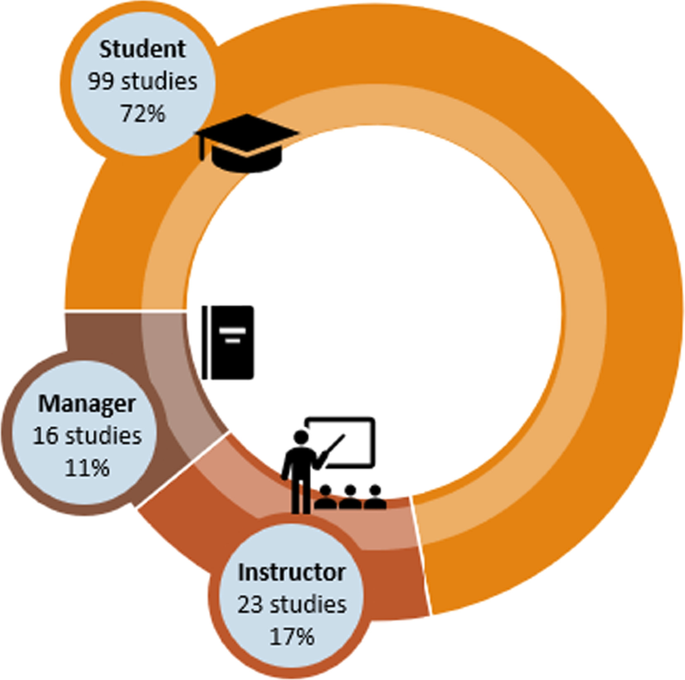

Intended user of AI

Of the 138 articles, the a priori coding shows that 72% of the studies focused on Students, followed by Instructors at 17% and Managers at 11%; see Fig. 7. The studies provided examples of AI being used to support students, such as providing access to learning materials for inclusive learning (Gupta & Chen, 2022), immediate answers to student questions, self-testing opportunities (Yao, 2022), and instant personalized feedback (Mousavi et al., 2020).

The data revealed a large emphasis on students in the use of AIEd in HE. This user focus differs from a recent systematic review of AIEd in K-12, which found that AIEd studies in K-12 settings prioritized teachers (Crompton et al., 2022). This may suggest that HE uses AI to focus more on students than K-12 does. However, the large number of student-focused studies in HE may be due to students being more easily accessible to HE researchers, who may study their own students. The ethical review process is also typically much shorter in HE than in K-12. Therefore, the data on intended users should be interpreted with these alternative explanations in mind. Interestingly, Managers were the lowest focus both in K-12 and in this study of HE. AI has great potential to collect, cross-reference, and examine data across large datasets, allowing the data to be used for actionable insight. More focus on the use of AI by managers would tap into this potential.

How is AI used in HE

Using grounded coding, the use of AIEd in each of the 138 articles was examined, and five major codes emerged from the data. These codes provide insight into how AI was used in HE. The five codes are: (1) Assessment/Evaluation, (2) Predicting, (3) AI Assistant, (4) Intelligent Tutoring System (ITS), and (5) Managing Student Learning. Each code also has axial codes, which are secondary codes forming subcategories of the main category. Each code is delineated below, with a figure of the codes and further descriptive information and examples.

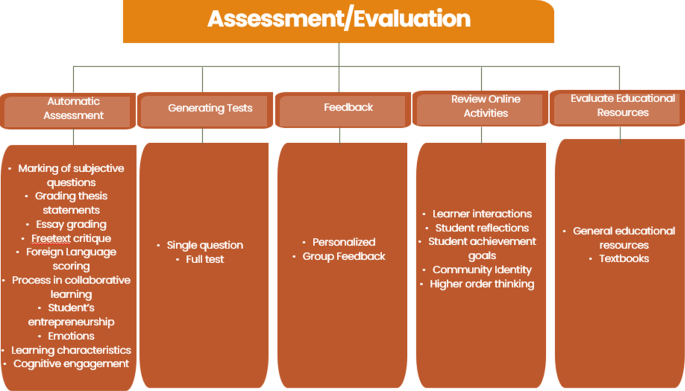

Assessment/evaluation

Assessment and Evaluation was the most common use of AIEd in HE. Within this code there were six axial codes broken down into further codes; see Fig. 8. Automatic assessment was most common, seen in 26 of the studies. It was interesting to see that this involved assessment of academic achievement, but also other factors, such as affect.

Automatic assessment was used to support a variety of learners in HE. As well as reducing the time it takes instructors to grade (Rutner & Scott, 2022), automatic grading benefited students with diverse needs. For example, Zhang and Xu (2022) used automatic assessment to improve the academic writing skills of Uyghur ethnic minority students living in China. Writing carries a variety of cultural nuances, and in this study the students were shown to engage with the automatic assessment system behaviorally, cognitively, and affectively. This allowed the students to engage in self-regulated learning while improving their writing.

Feedback was a frequent description in the studies, with students given text and/or images as feedback for formative evaluation. Mousavi et al. (2020) developed an automated personalized feedback system for first-year biology students, tailored to the students’ specific demographics, attributes, and academic status. Because AIEd can analyze multiple datasets involving many different students, AI was also used to assess and provide feedback on students’ group work (viz., Ouatik et al., 2021).

AI also supports instructors in generating questions and creating tests with multiple questions (Yang et al., 2021). For example, Lu et al. (2021) used natural language processing to create a system that automatically generated tests. Using a Turing-type test, the researchers found that AI technologies can generate highly realistic short-answer questions. The ability of AI to develop many questions is highly valuable, as tests can take a great deal of time to create. However, instructors should always check AI-generated questions to ensure they are correct and match the learning objectives of the class, especially in high-stakes summative assessments.

Another axial code within assessment and evaluation revealed that AI was used to review activities in the online space. This included evaluating students’ reflections, achievement goals, community identity, and higher order thinking (viz., Huang et al., 2021). Three studies used AIEd to evaluate educational materials, including general resources and textbooks (viz., Koć‑Januchta et al., 2022). It is interesting to see AI used to assess educational products rather than artifacts developed by students. While this process may be very similar in nature, it shows researchers thinking beyond the traditional use of AI for assessment to provide other affordances.

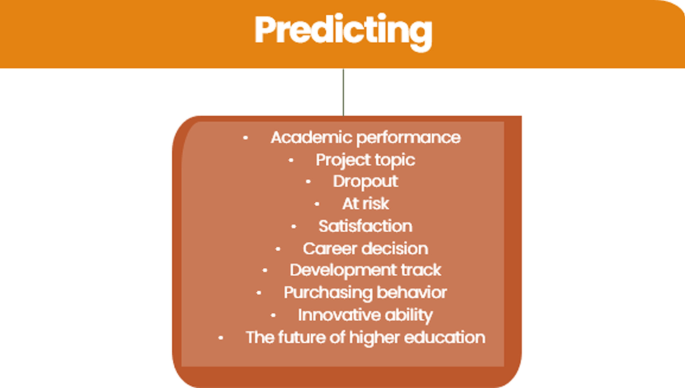

Predicting

Predicting was a common use of AIEd in HE, with 21 studies focused specifically on the use of AI to forecast trends in data. Ten axial codes emerged on the ways AI was used to make predictions: nine concerned students and one concerned the future of higher education. See Fig. 9.

Extant systematic reviews on HE highlighted the use of AIEd for prediction (viz., Chu et al., 2022; Hinojo-Lucena et al., 2019; Ouyang et al., 2022; Zawacki-Richter et al., 2019). Ten of the articles in this review used AI to predict academic performance. Many of the axial codes overlapped, such as predicting at-risk students and predicting dropouts; however, each provided distinct affordances. An example is the study by Qian et al. (2022), who examined students taking a MOOC. In MOOCs, the vast number of enrolled students makes it challenging to obtain information on individuals (Krause & Lowe, 2014). However, Qian et al. used AIEd to predict students’ future grades by inputting 17 different learning features, including past grades, into an artificial neural network. The model was able to predict students’ grades and highlight students at risk of dropping out of the course.
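The kind of prediction described for Qian et al. (2022) can be sketched as supervised learning on per-student feature vectors. The sketch below is a simplified stand-in, not their model: it trains a single logistic unit (a neural network with no hidden layer) by gradient descent on three invented features instead of an artificial neural network on 17 features. The data, feature names, and the 0.5 flagging threshold are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_risk(w: list[float], b: float, x: list[float]) -> float:
    """Estimated probability that a student with features x is at risk."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic(X: list[list[float]], y: list[int],
                   lr: float = 0.5, epochs: int = 2000) -> tuple[list[float], float]:
    """Fit a single logistic unit by batch gradient descent on the log loss."""
    n_features, m = len(X[0]), len(X)
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = predict_risk(w, b, xi) - yi  # gradient of the log loss w.r.t. the logit
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


# Hypothetical features, each scaled to [0, 1]:
# [prior grade, share of course videos watched, quiz average]
X = [[0.90, 0.95, 0.85], [0.80, 0.90, 0.80], [0.70, 0.60, 0.75], [0.85, 0.70, 0.90],
     [0.30, 0.20, 0.35], [0.40, 0.10, 0.30], [0.20, 0.30, 0.25], [0.35, 0.40, 0.20]]
y = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = failed or dropped out (invented labels)

w, b = train_logistic(X, y)
for student in ([0.25, 0.15, 0.30], [0.90, 0.90, 0.90]):
    risk = predict_risk(w, b, student)
    print(student, f"risk={risk:.2f}", "FLAG" if risk >= 0.5 else "ok")
```

A working early-warning system would need far more data, evaluation on held-out students, and careful attention to privacy.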

In a systematic review on AIEd within the K-12 context (viz., Crompton et al., 2022), prediction was less pronounced in the findings. In the K-12 setting, there was only a brief mention of the use of AI to predict student academic performance. One of the studies mentioned students at risk of dropping out, but this was immediately followed by questions about privacy and a description of this use as “sensitive”. The studies in this HE systematic review, by contrast, cover a wide range of AI predictive affordances. Sensitivity is still important in an HE setting, but it is positive to see the valuable insight that prediction provides, which can be used to help students avoid failing to reach their goals.

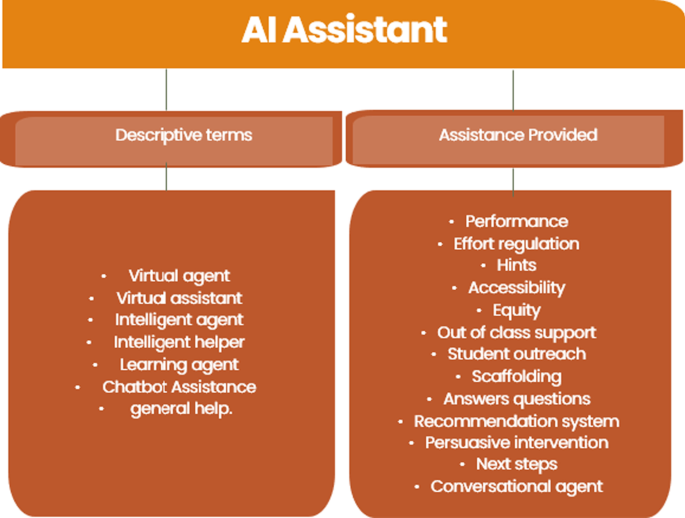

AI assistant

The studies evaluated in this review gave the AI Assistant used to support learners a variety of different names, including virtual assistant, virtual agent, intelligent agent, intelligent tutor, and intelligent helper. Crompton et al. (2022) explained that these terms delineate the way the AI appears to the user: for example, whether the AI has an anthropomorphic presence, such as an avatar, or supports the user by other means, such as text prompts. The findings of this systematic review align with Crompton et al.’s (2022) descriptive differences of the AI Assistant. Furthermore, this code included studies that provided assistance to students but did not necessarily use the word assistance, such as the use of chatbots for student outreach, answering questions, and providing other assistance. See Fig. 10 for the axial codes for AI Assistant.

Many of these assistants offered multiple supports to students, such as Alex, the AI described as a virtual change agent in Kim and Bennekin’s (2016) study. Alex interacted with students in a college mathematics course by asking diagnostic questions and giving support depending on student needs. Alex’s support was organized into four stages: (1) goal initiation (“Want it”), (2) goal formation (“Plan for it”), (3) action control (“Do it”), and (4) emotion control (“Finish it”). Alex provided responses depending on which of these four areas students needed help with. These messages were intended to encourage students to persist in their studies and degree programs and to improve their performance.
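Alex's stage-based support can be pictured as routing each student to a message for the stage where the diagnostic questions indicate the greatest need. The sketch below assumes, hypothetically, that answers are reduced to one score per stage and that the lowest score marks the stage needing help. The scoring rule, the tie-breaking rule, and the message texts are invented for illustration and are not taken from Kim and Bennekin (2016).

```python
from enum import Enum


class Stage(Enum):
    GOAL_INITIATION = "Want it"
    GOAL_FORMATION = "Plan for it"
    ACTION_CONTROL = "Do it"
    EMOTION_CONTROL = "Finish it"


# Hypothetical support messages; not the wording used in the original study.
MESSAGES = {
    Stage.GOAL_INITIATION: "Think about why this course matters for your degree.",
    Stage.GOAL_FORMATION: "Let's break this week's work into a study plan.",
    Stage.ACTION_CONTROL: "Start one problem set now and check it off when done.",
    Stage.EMOTION_CONTROL: "Setbacks are normal; look back at what has gone well.",
}


def choose_support(scores: dict[Stage, float]) -> tuple[Stage, str]:
    """Return the lowest-scoring stage and its message.

    Assumed rule: ties go to the earlier stage in the four-stage sequence.
    """
    order = list(Stage)  # definition order: Want it, Plan for it, Do it, Finish it
    weakest = min(order, key=lambda s: (scores[s], order.index(s)))
    return weakest, MESSAGES[weakest]


stage, message = choose_support({
    Stage.GOAL_INITIATION: 4.0,
    Stage.GOAL_FORMATION: 2.5,
    Stage.ACTION_CONTROL: 3.0,
    Stage.EMOTION_CONTROL: 3.5,
})
print(stage.value, "->", message)  # Plan for it -> Let's break this week's work into a study plan.
```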

The role of AI in providing assistance connects back to Vygotsky’s (1978) seminal work on the Zone of Proximal Development (ZPD). The ZPD highlights the degree to which students can rapidly develop when assisted. Vygotsky often described this assistance as coming from a person. However, with technological advancements, the AI assistants in these studies are providing that support for students. The affordances of AI can also ensure that support is timely, without waiting for a person to be available. AI assistance can also take into account students’ academic ability, preferences, and the best strategies for supporting them. These features were evident in Kim and Bennekin’s (2016) study using Alex.

Intelligent tutoring system

The use of Intelligent Tutoring Systems (ITS) was revealed in the grounded coding. ITS are adaptive instructional systems that combine AI techniques with educational methods. An ITS customizes educational activities and strategies based on students’ characteristics and needs (Mousavinasab et al., 2021). While ITS may be an anticipated finding in AIEd HE systematic reviews, it was interesting that extant reviews similar to this study did not always describe their use in HE. For example, Ouyang et al. (2022) included “intelligent tutoring system” in their search terms, describing it as a common technique, yet ITS was not mentioned again in the paper. Zawacki-Richter et al. (2019), on the other hand, noted that ITS was among the four overarching findings on the use of AIEd in HE. Chu et al. (2022) then used Zawacki-Richter et al.’s four uses of AIEd for their recent systematic review.

In this systematic review, 18 studies specifically mentioned that they were using an ITS. The ITS code did not require axial codes, as the systems all performed the same type of function in HE, namely, providing adaptive instruction to students. For example, de Chiusole et al. (2020) developed Stat-Knowlab, an ITS that identifies each student’s level of competence and best learning path. Thus, Stat-Knowlab personalizes students’ learning and provides only the educational activities that the student is ready to learn. This ITS is able to monitor the evolution of the learning process as the student interacts with the system. In another study, Khalfallah and Slama (2018) built an ITS called LabTutor for engineering students. LabTutor served as an experienced instructor, enabling students to access and perform experiments on laboratory equipment while adapting to the profile of each student.
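Stat-Knowlab is built on competence-based knowledge space theory (de Chiusole et al., 2020). In knowledge space theory generally, the activities a student is "ready to learn" are formalized as the outer fringe of the student's knowledge state: the items not yet mastered whose addition to the current state yields another feasible state. The sketch below illustrates only that general idea, assuming a hypothetical prerequisite map for a few statistics topics; Stat-Knowlab's actual competence model and assessment procedure are more elaborate, and the function names are hypothetical.

```python
from itertools import chain, combinations

KnowledgeState = frozenset[str]


def states_from_prerequisites(prereqs: dict[str, set[str]]) -> set[KnowledgeState]:
    """All item subsets that contain the direct prerequisites of every item they hold."""
    items = list(prereqs)
    states: set[KnowledgeState] = set()
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            s = frozenset(combo)
            if all(prereqs[i] <= s for i in s):
                states.add(s)
    return states


def outer_fringe(state: KnowledgeState, structure: set[KnowledgeState]) -> set[str]:
    """Items not yet mastered whose addition to `state` gives another feasible state."""
    domain = set(chain.from_iterable(structure))
    return {q for q in domain - state if state | {q} in structure}


# Hypothetical prerequisite map for an introductory statistics unit.
PREREQS = {
    "mean": set(),
    "median": set(),
    "variance": {"mean"},
    "sd": {"variance"},
    "z_score": {"mean", "sd"},
}
STRUCTURE = states_from_prerequisites(PREREQS)

print(sorted(outer_fringe(frozenset({"mean"}), STRUCTURE)))  # ['median', 'variance']
```

Offering only outer-fringe items is one simple way to keep activities within reach of what the student has already mastered.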

The student population in university classes can run into the hundreds, and with the advent of MOOCs, class sizes can even reach the thousands. Even in a small class of 20 students, the instructor cannot physically provide each student with immediate, individually personalized questions. Instructors need time to read and check answers and then further time to provide feedback before determining what the next question should be. Working alongside the instructor, AIEd can provide immediate instruction, guidance, feedback, and follow-up questioning without delay or fatigue. This appears to be an effective use of AIEd, especially within the HE context.

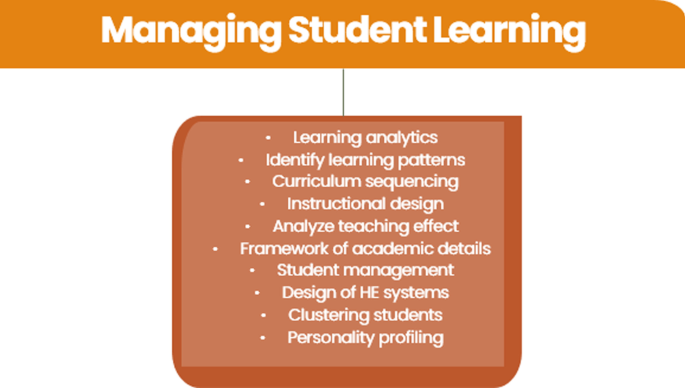

Managing student learning

Another code that emerged in the grounded coding focused on the use of AI for managing student learning. Administrators and instructors use AI to manage student learning through information provision, organization, and data analysis. The axial codes reveal the trends in the use of AI in managing student learning; see Fig. 11.

Learning analytics was an a priori term often found in the studies; it describes “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (Long & Siemens, 2011, p. 34). The studies investigated in this systematic review spanned levels of study and subject areas and provided administrators and instructors with different types of information to guide their work. One of those studies was conducted by Mavrikis et al. (2019), who described learning analytics as teacher assistance tools. In their study, learning analytics were used in an exploratory learning environment, with targeted visualizations supporting classroom orchestration. These visualizations, displayed as screenshots in the study, provided information such as the interactions between students and their achievement of goals. They resemble brightly colored infographics that quickly draw the eye to pertinent information.

AI is also used for other tasks, such as sequencing curriculum in pacing guides for future groups of students and designing instruction. Zhang (2022) described how designing an AI teaching system for talent cultivation, and using its digital affordances to establish a quality assurance system for practical teaching, provides new mechanisms for the design of university education systems. In developing such a system, Zhang found that the stability of the AI-supported instructional design overcame the drawbacks of subjectivity in traditional manual instructional design.

Another trend that emerged from the studies was the use of AI to manage student big data to support learning. Ullah and Hafiz (2022) lament that, with traditional methods, including non-AI digital techniques, it is very difficult for instructors to pay attention to every student’s learning progress, and that big data analysis techniques are needed. The ability to look across and within large data sets to inform instruction is a valuable affordance of AIEd in HE. Although the use of AIEd to manage student learning emerged from the data, this study uncovered only 19 studies in 7 years (2016–2022) that focused on the use of AIEd to manage student data. This scarcity was also noted in a recent study in the K-12 space (Crompton et al., 2022). Chu et al. (2022), examining the 50 most-cited AIEd articles, did not report the use of AIEd for managing student data among the top uses of AIEd in HE. More research appears to be needed to fully explore the possibilities of AI in this area.
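Clustering of students is one of the ways this review found AI being used to manage student learning, and it is a typical big data analysis technique of the kind Ullah and Hafiz call for. As an illustration only, the sketch below groups students by two hypothetical engagement features with k-means (Lloyd's algorithm); none of the reviewed studies is claimed to use this exact method, and the data and feature choices are invented.

```python
import math

Point = tuple[float, ...]


def kmeans(points: list[Point], k: int, iters: int = 100) -> tuple[list[int], list[Point]]:
    """Lloyd's k-means with a deterministic farthest-point initialisation."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next seed: the point farthest from its nearest existing centroid.
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each student joins the nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # leave an empty cluster where it is
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical features scaled to [0, 1]: (share of weekly activities completed, quiz average)
students = [(0.90, 0.85), (0.95, 0.90), (0.85, 0.80), (0.20, 0.30), (0.15, 0.25), (0.25, 0.20)]
labels, centres = kmeans(students, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

An instructor might then look more closely at the low-engagement cluster; in practice, features on different scales would need standardizing first.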

Gaps and future research

From this systematic review, six gaps emerged in the data, providing opportunities for future studies to investigate and provide a fuller understanding of how AIEd can be used in HE. (1) The majority of the research was conducted in high-income countries, revealing a paucity of research in developing countries. More research should be conducted in developing countries to expand understanding of how AI can enhance learning in under-resourced communities. (2) Almost 50% of the studies were conducted in the areas of language learning, computer science, and engineering. Research by members of a wider range of academic departments would help to advance knowledge of the use of AI in more disciplines. (3) This study revealed that faculty affiliated with schools of education are taking an increasing role in researching the use of AIEd in HE. As this body of knowledge grows, faculty in schools of education should share their research on the pedagogical affordances of AI so that this knowledge can be applied by faculty across disciplines. (4) The vast majority of the research was conducted at the undergraduate level. More research needs to be done at the graduate level, as AI provides many opportunities in this environment. (5) Little research examined how AIEd can assist instructors and managers in their roles in HE. The potential of AI to assist both groups warrants further research. (6) Finally, much of the research investigated in this systematic review revealed the use of AIEd in traditional ways that enhance current practices or make them more efficient. More research needs to focus on the unexplored affordances of AIEd. As AI becomes more advanced and sophisticated, new opportunities will arise for AIEd, and researchers need to be at the forefront of these possible innovations.

In addition, empirical exploration is needed for new tools such as ChatGPT, which became available for public use at the end of 2022. Given the time it takes for a peer-reviewed journal article to be published, ChatGPT did not appear in the articles for this study. Interestingly, ChatGPT could fit a variety of the usage codes found in this study: students could get support in writing papers, and instructors could use ChatGPT to assess student work and to help write emails or descriptions for students. It would be pertinent for researchers to explore ChatGPT.

Limitations

The findings of this study show a rapid increase in the number of AIEd studies published in HE. However, to ensure a level of credibility, this study included only peer-reviewed journal articles. Because these articles take months to publish, conference proceedings and gray literature such as blogs and summaries may reveal further findings not explored in this study. In addition, the articles in this study were all published in English, which excluded findings from research published in other languages.

Conclusion

In response to the call by Hinojo-Lucena et al. (2019), Chu et al. (2022), and Zawacki-Richter et al. (2019), this study provides unique findings with an up-to-date examination of the use of AIEd in HE from 2016 to 2022. Past systematic reviews examined the research up to 2020. The findings of this study show that in 2021 and 2022, the number of publications rose to nearly two to three times that of previous years. With this rapid rise in the number of AIEd HE publications, new trends have emerged.

The findings show that, of the 138 studies examined, research was conducted in six of the seven continents of the world. Extant systematic reviews showed that the US led by a large margin in the number of studies published; this lead has now shifted to China. Another shift in AIEd HE concerns researcher affiliation: while extant studies lamented the lack of education faculty leading these studies, this systematic review found education to be the most common department affiliation at 28%, with computer science second at 20%. Undergraduate students were the most studied students at 72%. Similar to the findings of other studies, language learning was the most common subject domain, including writing, reading, and vocabulary acquisition. In terms of whom the AIEd was intended for, 72% of the studies focused on students, 17% on instructors, and 11% on managers.

Grounded coding was used to answer the overarching question of how AIEd was used in HE. Five usage codes emerged from the data: (1) Assessment/Evaluation, (2) Predicting, (3) AI Assistant, (4) Intelligent Tutoring System (ITS), and (5) Managing Student Learning. Assessment and evaluation served a wide variety of purposes, including assessing academic progress and student emotions towards learning, individual and group evaluations, and class-based online community assessments. Predicting emerged as a code with ten axial codes, as AIEd predicted dropouts and at-risk students, innovative ability, and career decisions. AI Assistants were specific to supporting students in HE. These assistants ranged from those with an anthropomorphic presence, such as virtual agents, to persuasive interventions delivered through digital programs. ITS were not always noted in extant systematic reviews but were specifically mentioned in 18 of the studies in this review. The ITS in this study provided strategies and approaches customized to students’ characteristics and needs. The final code highlighted the use of AI in managing student learning, including learning analytics, curriculum sequencing, instructional design, and clustering of students.

The findings of this study provide a springboard for future academics, practitioners, computer scientists, policymakers, and funders in understanding the state of the field in AIEd HE and how AI is used. It also provides actionable items to address gaps in the current understanding. As the use of AIEd will only continue to grow, this study can serve as a baseline for further research on the use of AIEd in HE.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Alajmi, Q., Al-Sharafi, M. A., & Abuali, A. (2020). Smart learning gateways for Omani HEIs towards educational technology: Benefits, challenges and solutions. International Journal of Information Technology and Language Studies, 4(1), 12–17.

Al-Tuwayrish, R. K. (2016). An evaluative study of machine translation in the EFL scenario of Saudi Arabia. Advances in Language and Literary Studies, 7(1), 5–10. https://doi.org/10.7575/aiac.alls.v.7n.1p.5

Ayse, T., & Nil, G. (2022). Automated feedback and teacher feedback: Writing achievement in learning English as a foreign language at a distance. The Turkish Online Journal of Distance Education, 23(2), 120–139.

Baykasoğlu, A., Özbel, B. K., Dudaklı, N., Subulan, K., & Şenol, M. E. (2018). Process mining based approach to performance evaluation in computer-aided examinations. Computer Applications in Engineering Education, 26(5), 1841–1861. https://doi.org/10.1002/cae.21971

Belur, J., Tompson, L., Thornton, A., & Simon, M. (2018). Interrater reliability in systematic review methodology: Exploring variation in coder decision-making. Sociological Methods & Research, 13(3), 004912411887999. https://doi.org/10.1177/0049124118799372

Çağataylı, M., & Çelebi, E. (2022). Estimating academic success in higher education using big five personality traits, a machine learning approach. Arabian Journal for Science and Engineering, 47, 1289–1298. https://doi.org/10.1007/s13369-021-05873-4

Chen, L., Chen, P., & Lin, Z. (2020). Artificial intelligence in education: A review. IEEE Access, 8, 75264–75278. https://doi.org/10.1109/ACCESS.2020.2988510

Chu, H., Tu, Y., & Yang, K. (2022). Roles and research trends of artificial intelligence in higher education: A systematic review of the top 50 most-cited articles. Australasian Journal of Educational Technology, 38(3), 22–42. https://doi.org/10.14742/ajet.7526

Cristianini, N. (2016). Intelligence reinvented. New Scientist, 232(3097), 37–41. https://doi.org/10.1016/S0262-4079(16)31992-3

Crompton, H., Bernacki, M. L., & Greene, J. (2020). Psychological foundations of emerging technologies for teaching and learning in higher education. Current Opinion in Psychology, 36, 101–105. https://doi.org/10.1016/j.copsyc.2020.04.011

Crompton, H., & Burke, D. (2022). Artificial intelligence in K-12 education. SN Social Sciences, 2, 113. https://doi.org/10.1007/s43545-022-00425-5

Crompton, H., Jones, M., & Burke, D. (2022). Affordances and challenges of artificial intelligence in K-12 education: A systematic review. Journal of Research on Technology in Education. https://doi.org/10.1080/15391523.2022.2121344

Crompton, H., & Song, D. (2021). The potential of artificial intelligence in higher education. Revista Virtual Universidad Católica del Norte, 62, 1–4. https://doi.org/10.35575/rvucn.n62a1

de Chiusole, D., Stefanutti, L., Anselmi, P., & Robusto, E. (2020). Stat-Knowlab. Assessment and learning of statistics with competence-based knowledge space theory. International Journal of Artificial Intelligence in Education, 30, 668–700. https://doi.org/10.1007/s40593-020-00223-1

Dever, D. A., Azevedo, R., Cloude, E. B., & Wiedbusch, M. (2020). The impact of autonomy and types of informational text presentations in game-based environments on learning: Converging multi-channel processes data and learning outcomes. International Journal of Artificial Intelligence in Education, 30(4), 581–615. https://doi.org/10.1007/s40593-020-00215-1

Górriz, J. M., Ramírez, J., Ortíz, A., Martínez-Murcia, F. J., Segovia, F., Suckling, J., Leming, M., Zhang, Y. D., Álvarez-Sánchez, J. R., Bologna, G., Bonomini, P., Casado, F. E., Charte, D., Charte, F., Contreras, R., Cuesta-Infante, A., Duro, R. J., Fernández-Caballero, A., Fernández-Jover, E., … Ferrández, J. M. (2020). Artificial intelligence within the interplay between natural and artificial computation: Advances in data science, trends and applications. Neurocomputing, 410, 237–270. https://doi.org/10.1016/j.neucom.2020.05.078

Gough, D., Oliver, S., & Thomas, J. (2017). An introduction to systematic reviews (2nd ed.). Sage.

Gupta, S., & Chen, Y. (2022). Supporting inclusive learning using chatbots? A chatbot-led interview study. Journal of Information Systems Education, 33(1), 98–108.

Hemingway, P., & Brereton, N. (2009). What is a systematic review? Hayward Medical Group. http://www.medicine.ox.ac.uk/bandolier/painres/download/whatis/syst-review.pdf

Hinojo-Lucena, F., Aznar-Díaz, I., Cáceres-Reche, M., & Romero-Rodríguez, J. (2019). Artificial intelligence in higher education: A bibliometric study on its impact in the scientific literature. Education Sciences, 9(1), 51. https://doi.org/10.3390/educsci9010051

Hrastinski, S., Olofsson, A. D., Arkenback, C., Ekström, S., Ericsson, E., Fransson, G., Jaldemark, J., Ryberg, T., Öberg, L.-M., Fuentes, A., Gustafsson, U., Humble, N., Mozelius, P., Sundgren, M., & Utterberg, M. (2019). Critical imaginaries and reflections on artificial intelligence and robots in postdigital K-12 education. Postdigital Science and Education, 1(2), 427–445. https://doi.org/10.1007/s42438-019-00046-x

Huang, C., Wu, X., Wang, X., He, T., Jiang, F., & Yu, J. (2021). Exploring the relationships between achievement goals, community identification and online collaborative reflection. Educational Technology & Society, 24(3), 210–223.

Hwang, G. J., & Tu, Y. F. (2021). Roles and research trends of artificial intelligence in mathematics education: A bibliometric mapping analysis and systematic review. Mathematics, 9(6), 584. https://doi.org/10.3390/math9060584

Khalfallah, J., & Slama, J. B. H. (2018). The effect of emotional analysis on the improvement of experimental e-learning systems. Computer Applications in Engineering Education, 27(2), 303–318. https://doi.org/10.1002/cae.22075

Kim, C., & Bennekin, K. N. (2016). The effectiveness of volition support (VoS) in promoting students’ effort regulation and performance in an online mathematics course. Instructional Science, 44, 359–377. https://doi.org/10.1007/s11251-015-9366-5

Koć-Januchta, M. M., Schönborn, K. J., Roehrig, C., Chaudhri, V. K., Tibell, L. A. E., & Heller, C. (2022). “Connecting concepts helps put main ideas together”: Cognitive load and usability in learning biology with an AI-enriched textbook. International Journal of Educational Technology in Higher Education, 19, 11. https://doi.org/10.1186/s41239-021-00317-3

Krause, S. D., & Lowe, C. (2014). Invasion of the MOOCs: The promise and perils of massive open online courses. Parlor Press.

Li, D., Tong, T. W., & Xiao, Y. (2021). Is China emerging as the global leader in AI? Harvard Business Review. https://hbr.org/2021/02/is-china-emerging-as-the-global-leader-in-ai

Liang, J. C., Hwang, G. J., Chen, M. R. A., & Darmawansah, D. (2021). Roles and research foci of artificial intelligence in language education: An integrated bibliographic analysis and systematic review approach. Interactive Learning Environments. https://doi.org/10.1080/10494820.2021.1958348

Liu, S., Hu, T., Chai, H., Su, Z., & Peng, X. (2022). Learners’ interaction patterns in asynchronous online discussions: An integration of the social and cognitive interactions. British Journal of Educational Technology, 53(1), 23–40. https://doi.org/10.1111/bjet.13147

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in learning and education. Educause Review, 46(5), 31–40.

Lu, O. H. T., Huang, A. Y. Q., Tsai, D. C. L., & Yang, S. J. H. (2021). Expert-authored and machine-generated short-answer questions for assessing students learning performance. Educational Technology & Society, 24(3), 159–173.

Mavrikis, M., Geraniou, E., Santos, S. G., & Poulovassilis, A. (2019). Intelligent analysis and data visualization for teacher assistance tools: The case of exploratory learning. British Journal of Educational Technology, 50(6), 2920–2942. https://doi.org/10.1111/bjet.12876

Moher, D., Shamseer, L., Clarke, M., Ghersi, D., Liberati, A., Petticrew, M., Shekelle, P., & Stewart, L. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews, 4(1), 1–9. https://doi.org/10.1186/2046-4053-4-1

Mousavi, A., Schmidt, M., Squires, V., & Wilson, K. (2020). Assessing the effectiveness of student advice recommender agent (SARA): The case of automated personalized feedback. International Journal of Artificial Intelligence in Education, 31(2), 603–621. https://doi.org/10.1007/s40593-020-00210-6

Mousavinasab, E., Zarifsanaiey, N., Kalhori, S. R. N., Rakhshan, M., Keikha, L., & Saeedi, M. G. (2021). Intelligent tutoring systems: A systematic review of characteristics, applications, and evaluation methods. Interactive Learning Environments, 29(1), 142–163. https://doi.org/10.1080/10494820.2018.1558257

Ouatik, F., Ouatik, F., Fadli, H., Elgorari, A., Mohadab, M. E. L., Raoufi, M., et al. (2021). E-Learning & decision making system for automate students assessment using remote laboratory and machine learning. Journal of E-Learning and Knowledge Society, 17(1), 90–100. https://doi.org/10.20368/1971-8829/1135285

Ouyang, F., Zheng, L., & Jiao, P. (2022). Artificial intelligence in online higher education: A systematic review of empirical research from 2011–2020. Education and Information Technologies, 27, 7893–7925. https://doi.org/10.1007/s10639-022-10925-9

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T., Mulrow, C., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. British Medical Journal. https://doi.org/10.1136/bmj.n71

Popenici, S. A. D., & Kerr, S. (2017). Exploring the impact of artificial intelligence on teaching and learning in higher education. Research and Practice in Technology Enhanced Learning, 12, 22. https://doi.org/10.1186/s41039-017-0062-8

PRISMA Statement. (2021). PRISMA endorsers. PRISMA statement website. http://www.prisma-statement.org/Endorsement/PRISMAEndorsers

Qian, Y., Li, C.-X., Zou, X.-G., Feng, X.-B., Xiao, M.-H., & Ding, Y.-Q. (2022). Research on predicting learning achievement in a flipped classroom based on MOOCs by big data analysis. Computer Applications in Engineering Education, 30, 222–234. https://doi.org/10.1002/cae.22452

Rutner, S. M., & Scott, R. A. (2022). Use of artificial intelligence to grade student discussion boards: An exploratory study. Information Systems Education Journal, 20(4), 4–18.

Salas-Pilco, S., & Yang, Y. (2022). Artificial intelligence application in Latin America higher education: A systematic review. International Journal of Educational Technology in Higher Education, 19, 21. https://doi.org/10.1186/s41239-022-00326-w

Saldaña, J. (2015). The coding manual for qualitative researchers (3rd ed.). Sage.

Shukla, A. K., Janmaijaya, M., Abraham, A., & Muhuri, P. K. (2019). Engineering applications of artificial intelligence: A bibliometric analysis of 30 years (1988–2018). Engineering Applications of Artificial Intelligence, 85, 517–532. https://doi.org/10.1016/j.engappai.2019.06.010

Strauss, A., & Corbin, J. (1995). Grounded theory methodology: An overview. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (pp. 273–285). Sage.

Turing, A. M. (1937). On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society, s2-42(1), 230–265.

Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460.

Ullah, H., & Hafiz, M. A. (2022). Exploring effective classroom management strategies in secondary schools of Punjab. Journal of the Research Society of Pakistan, 59(1), 76.

Verdú, E., Regueras, L. M., Gal, E., et al. (2017). Integration of an intelligent tutoring system in a course of computer network design. Educational Technology Research and Development, 65, 653–677. https://doi.org/10.1007/s11423-016-9503-0

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

Winkler-Schwartz, A., Bissonnette, V., Mirchi, N., Ponnudurai, N., Yilmaz, R., Ledwos, N., Siyar, S., Azarnoush, H., Karlik, B., & Del Maestro, R. F. (2019). Artificial intelligence in medical education: Best practices using machine learning to assess surgical expertise in virtual reality simulation. Journal of Surgical Education, 76(6), 1681–1690. https://doi.org/10.1016/j.jsurg.2019.05.015

Yang, A. C. M., Chen, I. Y. L., Flanagan, B., & Ogata, H. (2021). Automatic generation of cloze items for repeated testing to improve reading comprehension. Educational Technology & Society, 24(3), 147–158.

Yao, X. (2022). Design and research of artificial intelligence in multimedia intelligent question answering system and self-test system. Advances in Multimedia. https://doi.org/10.1155/2022/2156111

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education—Where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27. https://doi.org/10.1186/s41239-019-0171-0

Zhang, F. (2022). Design and application of artificial intelligence technology-driven education and teaching system in universities. Computational and Mathematical Methods in Medicine. https://doi.org/10.1155/2022/8503239

Zhang, Z., & Xu, L. (2022). Student engagement with automated feedback on academic writing: A study on Uyghur ethnic minority students in China. Journal of Multilingual and Multicultural Development. https://doi.org/10.1080/01434632.2022.2102175

Acknowledgements

The authors would like to thank Mildred Jones, Katherina Nako, Yaser Sendi, and Ricardo Randall for data gathering and organization.

Author information

Contributions

HC: Conceptualization; Data curation; Formal analysis; Methodology; Project administration; Writing (original draft); Writing (review & editing). DB: Conceptualization; Data curation; Formal analysis; Methodology; Project administration; Writing (original draft); Writing (review & editing). Both authors read and approved this manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Crompton, H., Burke, D. Artificial intelligence in higher education: the state of the field. Int J Educ Technol High Educ 20, 22 (2023). https://doi.org/10.1186/s41239-023-00392-8

DOI: https://doi.org/10.1186/s41239-023-00392-8